基于LSTM的气候预测:构建可扩展的时间序列模型

气候数据本质上是时间序列数据——温度趋势、降雨模式和季节变化都遵循复杂的时间依赖关系。在这个项目中,我构建了一个基于长短期记忆网络(LSTM)的模型,使用历史数据来预测气候变量,展示了循环神经网络(RNN)在捕捉序列模式方面的优势。

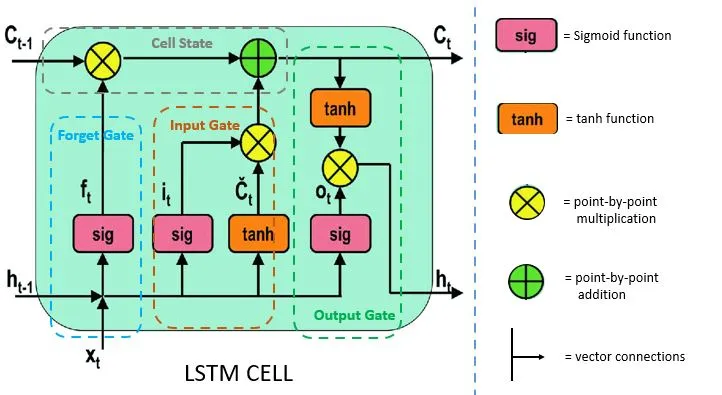

模型架构

设置环境

在深入数据之前,让我们通过导入必要的库来设置我们的环境。我们将使用流行的数据科学库,如NumPy、Pandas和Matplotlib,以及TensorFlow来构建我们的LSTM模型。

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import plotly.express as px

from sklearn.preprocessing import StandardScaler

from tensorflow.keras.models import Sequential, load_model

from tensorflow.keras.layers import LSTM, Dense, Dropout

from tensorflow.keras.preprocessing.sequence import TimeseriesGenerator

import tensorflow as tf

import os

plt.style.use('fivethirtyeight')

import warnings

warnings.filterwarnings('ignore')

加载和探索数据集

Jena气候数据集可以从TensorFlow的数据集仓库中获取。我们将下载并加载该数据集,然后快速查看其结构。

zip_path = tf.keras.utils.get_file(

origin='https://storage.googleapis.com/tensorflow/tf-keras-datasets/jena_climate_2009_2016.csv.zip',

fname='jena_climate_2009_2016.csv.zip',

extract=True)

extracted_dir = zip_path.replace('.zip', '')

csv_file_path = os.path.join(extracted_dir, 'jena_climate_2009_2016.csv')

df = pd.read_csv(csv_file_path)

df.head(2)python

输出

| Index | Feature | Format | Description |

|-------|-----------------|-------------------|-----------------------------------------------------------------------------------------------|

| 1 | Date Time | `01.01.2009 00:10:00` | Date-time reference |

| 2 | p (mbar) | `996.52` | Atmospheric pressure in millibars |

| 3 | T (degC) | `-8.02` | Temperature in Celsius |

| 4 | Tpot (K) | `265.4` | Temperature in Kelvin |

| 5 | Tdew (degC) | `-8.9` | Dew point temperature in Celsius (absolute water content in air) |

| 6 | rh (%) | `93.3` | Relative humidity (%), indicating how saturated the air is with water vapor |

| 7 | VPmax (mbar) | `3.33` | Saturation vapor pressure (maximum vapor pressure at a given temperature) |

| 8 | VPact (mbar) | `3.11` | Actual vapor pressure |

| 9 | VPdef (mbar) | `0.22` | Vapor pressure deficit (difference between saturation and actual vapor pressure) |

| 10 | sh (g/kg) | `1.94` | Specific humidity (mass of water vapor per unit mass of air) |

| 11 | H2OC (mmol/mol) | `3.12` | Water vapor concentration (moles of water vapor per mole of air) |

| 12 | rho (g/m³) | `1307.75` | Air density |

| 13 | wv (m/s) | `1.03` | Wind speed |

| 14 | max. wv (m/s) | `1.75` | Maximum wind speed |

| 15 | wd (deg) | `152.3` | Wind direction in degrees

数据清洗和预处理

数据清洗对于确保模型质量至关重要。我们将首先从将列名中的空格替换为下划线开始,并处理缺失值。

df.columns = df.columns.map(lambda x: x.replace(' ', '_').replace('.', ''))

cols = list(df.columns)

for col in cols:

unique_count = df[col].nunique()

most_common_value = df[col].mode().values[0]

null_count = df[col].isnull().sum()

print(f'Column {col} has {unique_count} unique values, most common value: {most_common_value}, & {null_count} null values')

print('')def date_features(df):

df['date'] = pd.to_datetime(df['Date_Time'], format='%d.%m.%Y %H:%M:%S')

df['year'] = df['date'].dt.year

df['month'] = df['date'].dt.month

df['week'] = df['date'].dt.isocalendar().week

df['quarter'] = df['date'].dt.quarter

df['day_of_year'] = df['date'].dt.dayofyear

df['day_of_week'] = df['date'].dt.dayofweek

df['hour'] = df['date'].dt.hour

df['minute'] = df['date'].dt.minute

df['second'] = df['date'].dt.second

df["month_sin"] = round(np.sin(2 * np.pi * df["month"] / 12), 2)

df["month_cos"] = round(np.cos(2 * np.pi * df["month"] / 12), 2)

df.drop('date', axis=1, inplace=True)

return df

df = date_features(df)

df.head(2)

数据分析

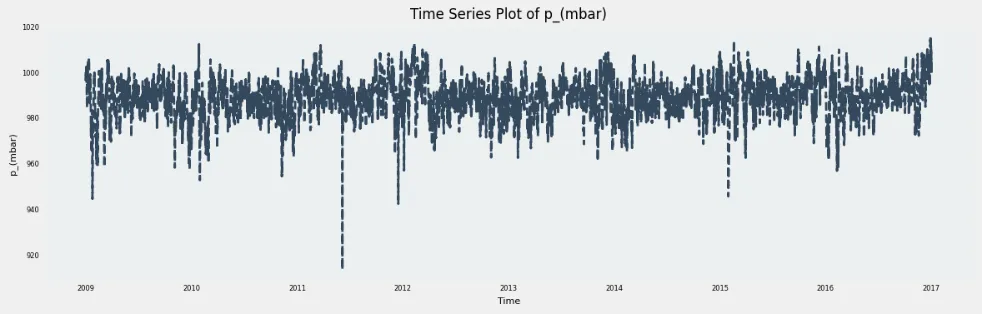

让我们随时间可视化每一列的特征,以更好地理解数据。

cols = ['p_(mbar)', 'T_(degC)', 'Tpot_(K)', 'Tdew_(degC)', 'rh_(%)', 'VPmax_(mbar)', 'VPact_(mbar)', 'VPdef_(mbar)', 'sh_(g/kg)', 'H2OC_(mmol/mol)', 'rho_(g/m**3)']

for col in cols:

df[col] = pd.to_numeric(df[col], errors='coerce')

df[col].fillna(df[col].mean(), inplace=True)

df[col] = df[col].astype(float)

plt.figure(figsize=(12, 4))

df[col].plot(subplots=True, linestyle='dashed', color='#34495e', linewidth=2, markersize=12, markerfacecolor='#f1c40f')

plt.title(f'Time Series Plot of {col}', fontsize=12)

plt.xlabel('Time', fontsize=8)

plt.ylabel(col, fontsize=8)

plt.tick_params(axis='both', labelsize=6)

plt.grid(False)

plt.gca().set_facecolor('#ecf0f1')

plt.tight_layout()

plt.show()

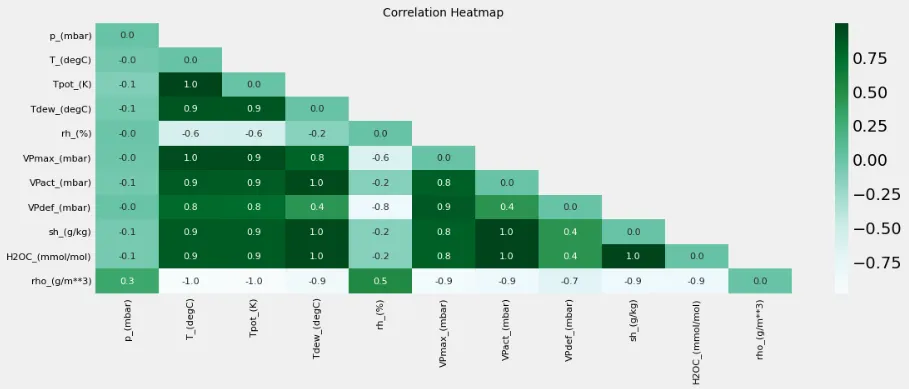

相关性热图

numeric_df = df[cols].select_dtypes(include=['number'])

df_corr = numeric_df.corr()

plt.figure(figsize=(12, 4))

mask = np.triu(np.ones(df_corr.shape), k=1)

np.fill_diagonal(df_corr.values, 0)

sns.heatmap(data=df_corr, annot=True, annot_kws={"fontsize": 8}, fmt='.1f', cmap='BuGn', mask=mask, cbar=True)

plt.title("Correlation Heatmap", fontsize=10)

plt.xticks(fontsize=8)

plt.yticks(fontsize=8)

plt.grid(False)

plt.savefig('correlation_heatmap.png')

plt.show()

观察结果:

空气压力、密度变量与天气温度之间存在强相关性。

特征工程

特征工程是为我们模型提供更多上下文信息的有效方法。我们将创建滞后特征、滚动平均值和标准差特征,以及安全百分比变化特征。

关键步骤:

- 滞后特征:捕捉目标变量的历史值,提供过去行为的上下文信息。

- 滚动平均值和标准差:捕捉目标变量在指定窗口内的平均值和变异性。

- 百分比变化:此特征捕捉目标变量的百分比变化,提供其变化率的洞察。

- 风向:将风向转换为正弦和余弦分量,以处理其周期性特质。

class FeatureEngineer(BaseEstimator, TransformerMixin):

def __init__(self, target_col='T_(degC)'):

self.target_col = target_col

def fit(self, X, y=None):

return self

def transform(self, X):

X_new = X.copy()

target = X_new[self.target_col]

# Lag Features

for days in [1, 7, 14]:

X_new[f'{self.target_col}_lag_{days}d'] = target.shift(days * 144)

# Rolling Features

for days in [1, 7, 14]:

window = days * 144

X_new[f'{self.target_col}_rollmean_{days}d'] = target.rolling(window, min_periods=1).mean()

X_new[f'{self.target_col}_rollstd_{days}d'] = target.rolling(window, min_periods=1).std()

# Safe Percentage Change

X_new[f'{self.target_col}_pct_change'] = target.pct_change().replace([np.inf, -np.inf], np.nan)

# Wind Direction

if 'wd_(deg)' in X.columns:

wd_rad = np.deg2rad(X_new['wd_(deg)'])

X_new['wd_sin'] = np.sin(wd_rad)

X_new['wd_cos'] = np.cos(wd_rad)

X_new = X_new.drop('wd_(deg)', axis=1)

X_new = X_new.replace([np.inf, -np.inf], np.nan)

X_new = X_new.fillna(method='ffill').fillna(method='bfill')

return X_new

feature_engineer = FeatureEngineer()

train_processed = feature_engineer.fit_transform(train_df)

val_processed = feature_engineer.transform(validation_df)

test_processed = feature_engineer.transform(test_df)

数据划分和缩放

将数据分为训练集、验证集和测试集,可以让我们评估模型在未见数据上的表现,确保模型具有良好的泛化能力。特征缩放对于提高模型的收敛速度和性能至关重要,特别是对于像LSTM这样的算法。

def train_val_test(df):

train_split = int(len(df) * 0.7)

validation_split = int(len(df) * 0.1)

train_df = df[:train_split]

validation_df = df[train_split: validation_split + train_split]

test_df = df[validation_split + train_split:]

return train_df, validation_df, test_df

train_df, validation_df, test_df = train_val_test(df)

def xy_splitter(train, validation, test):

target = 'T_(degC)'

X_train = train.drop(target, axis=1)

y_train = train[target]

X_test = test.drop(target, axis=1)

y_test = test[target]

X_val = validation.drop(target, axis=1)

y_val = validation[target]

print(f'Train shape: X_train {X_train.shape} & y_train {y_train.shape}')

print(f'Validation shape: X_val {X_val.shape} & y_val {y_val.shape}')

print(f'Test shape: X_test {X_test.shape} & y_test {y_test.shape}')

return X_train, y_train, X_val, y_val, X_test, y_test

X_train, y_train, X_val, y_val, X_test, y_test = xy_splitter(train_processed, val_processed, test_processed)

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_val = scaler.transform(X_val)

X_test = scaler.transform(X_test)

时间序列步骤

在时间序列生成器中,这一步对于将数据准备成适合训练LSTM模型的格式至关重要。回顾期决定了模型在做出预测时会考虑多少个之前的时间步。

look_back = 14

batch_size = 64

train_gen = TimeseriesGenerator(data=X_train, targets=y_train, length=look_back, batch_size=batch_size, shuffle=False)

val_gen = TimeseriesGenerator(data=X_val, targets=y_val, length=look_back, batch_size=batch_size, shuffle=False)

test_gen = TimeseriesGenerator(data=X_test, targets=y_test, length=look_back, batch_size=batch_size, shuffle=False)

建模

我们将使用TensorFlow和Keras构建一个LSTM模型。

LSTM网络由于其捕捉长期依赖关系的能力,非常适合时间序列预测。该架构包括dropout层,以防止过拟合,并确保模型对未见数据具有良好的泛化能力。

基本上,LSTM在神经网络中就像一个智能记忆系统,它使用“门”来有选择性地记住随时间推移的数据序列中的重要信息,从而能够捕捉长期依赖关系。它具有“遗忘门”、“输入门”和“输出门”,这些门协同工作以保留和移除必要的信息,帮助模型仅学习必要的信息。

n_features = X_train.shape[1]

model = Sequential()

model.add(LSTM(32, return_sequences=True, input_shape=(look_back, n_features)))

model.add(Dropout(0.2))

model.add(LSTM(32, return_sequences=False))

model.add(Dropout(0.2))

model.add(Dense(1))

model.compile(loss='mse', optimizer='adam')

callbacks = [

tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=10),

tf.keras.callbacks.ModelCheckpoint('best_model.keras', save_best_only=True)

]

model.summary()

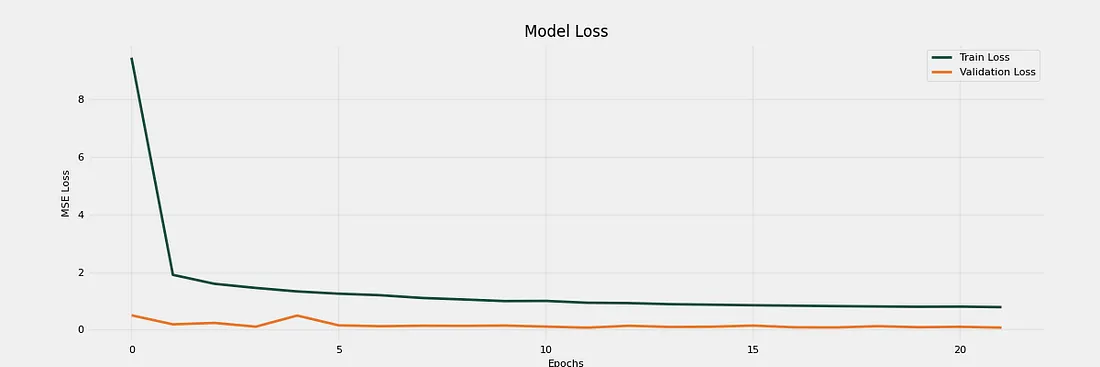

训练和评估

history = model.fit(train_gen, epochs=25, validation_data=val_gen, callbacks=callbacks)

plt.figure(figsize=(12, 4))

plt.plot(history.history['loss'], label='Train Loss', color='#1B4D3E', linewidth=2)

plt.plot(history.history['val_loss'], label='Validation Loss', color='#E9762B', linewidth=2)

plt.title('Model Loss', fontsize=12)

plt.xlabel('Epochs', fontsize=8)

plt.ylabel('MSE Loss', fontsize=8)

plt.xticks(fontsize=8)

plt.yticks(fontsize=8)

plt.legend(fontsize=8)

plt.grid(alpha=0.3)

plt.savefig('model_loss.png')

plt.show()

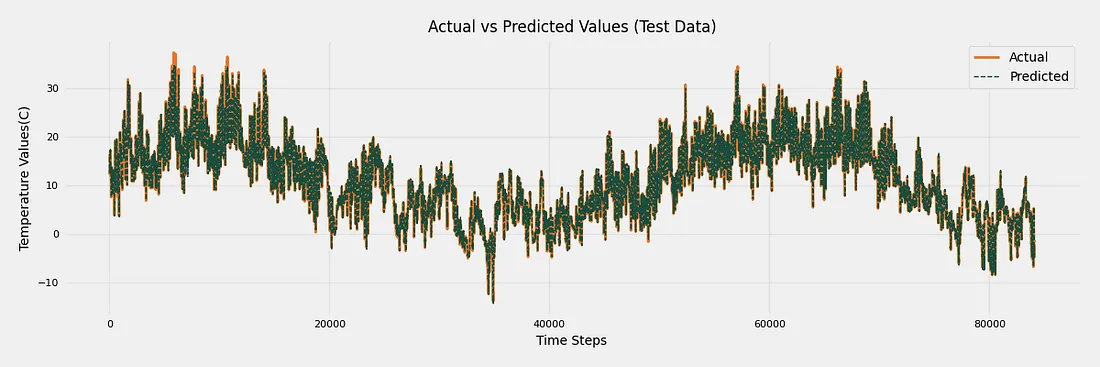

预测

best_model = load_model('best_model.keras')

test_preds = best_model.predict(test_gen)

plt.figure(figsize=(12, 4))

plt.plot(np.arange(len(y_test[look_back:])), y_test[look_back:], label='Actual', color='#E9762B', linewidth=2)

plt.plot(np.arange(len(test_preds)), test_preds, label='Predicted', color='#1B4D3E', linestyle='dashed', linewidth=1)

plt.title('Actual vs Predicted Values (Test Data)', fontsize=12)

plt.xlabel('Time Steps', fontsize=10)

plt.ylabel('Temperature Values (C)', fontsize=10)

plt.legend(fontsize=10)

plt.xticks(fontsize=8)

plt.yticks(fontsize=8)

plt.grid(alpha=0.3)

plt.tight_layout()

plt.savefig('prediction_performance.png')

plt.show()

因此,这是使用LSTM进行气候预测的工作流程。我们涵盖了数据加载、清洗、特征工程、模型构建、训练和评估。通过利用LSTM网络的强大功能,我们构建了一个稳健的模型,能够准确预测温度。

这种方法可以扩展到其他数据集,为连续和时间相关的变量提供有价值的见解和预测。

请随意尝试不同的架构、超参数和数据集,以进一步增强你的气候预测模型。