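A Developer's Essential Guide to Running and Debugging Large Language Models (LLMs)

Running large language models (LLMs) is challenging because of their hardware requirements, but a range of options makes these tools accessible. You can call models through APIs from major providers such as OpenAI and Anthropic, or deploy open-source alternatives through platforms such as Hugging Face and Ollama. Whether you interact with a model remotely or run it locally, techniques such as prompt engineering and output structuring can significantly improve performance for a specific application. This article covers the practical side of working with LLMs: handling hardware constraints, choosing a deployment method, and improving model output with proven techniques.

Using LLM APIs: A Quick Start

LLM APIs offer a direct way to access powerful language models without managing infrastructure. The provider handles the heavy computation, so developers can focus on the application. This tutorial works through an example to show, in a direct and product-oriented way, what these models can do. To keep it short, the implementation section covers only closed-source models; a high-level overview of open-source models follows at the end.

Implementing Closed-Source LLMs: API-Based Solutions

Closed-source LLMs expose powerful capabilities through simple API interfaces and deliver state-of-the-art performance with minimal infrastructure. They are maintained by companies such as OpenAI, Anthropic, and Google, and give developers production-grade models through simple API calls.

Let's look at how to use one of the most accessible closed-source APIs: Anthropic's API.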

# First, install the Anthropic Python library
# (notebook syntax; in a shell, drop the leading "!")
!pip install anthropic

import os

import anthropic

client = anthropic.Anthropic(
    # Read the key from an environment variable instead of hardcoding it
    api_key=os.environ.get("ANTHROPIC_API_KEY"),
)

Application: A Contextual Question-Answering Bot for User Guides

import os
from typing import Dict, List, Optional

import anthropic


class ClaudeDocumentQA:
    """
    An agent that uses Claude to answer questions based strictly on the content
    of a provided document.
    """

    def __init__(self, api_key: Optional[str] = None):
        """Initialize the Claude client with an API key."""
        # Use the key passed in, or fall back to the environment variable
        self.client = anthropic.Anthropic(
            api_key=api_key or os.environ.get("ANTHROPIC_API_KEY"),
        )
        # Model ID used for every request
        self.model = "claude-3-7-sonnet-20250219"

    def process_question(self, document: str, question: str) -> str:
        """
        Process a user question based on document context.

        Args:
            document: The text document to use as context
            question: The user's question about the document

        Returns:
            Claude's response answering the question based on the document
        """
        # Create a system prompt that instructs Claude to only use the provided document
        system_prompt = """
        You are a helpful assistant that answers questions based ONLY on the information
        provided in the DOCUMENT below. If the answer cannot be found in the document,
        say "I cannot find information about this in the provided document."
        Do not use any prior knowledge outside of what's explicitly stated in the document.
        """

        # Construct the user message with document and question
        user_message = f"""
        DOCUMENT:
        {document}

        QUESTION:
        {question}

        Answer the question using only information from the DOCUMENT above. If the information
        isn't in the document, say so clearly.
        """

        try:
            # Send request to Claude
            response = self.client.messages.create(
                model=self.model,
                max_tokens=1000,
                temperature=0.0,  # Low temperature for factual responses
                system=system_prompt,
                messages=[
                    {"role": "user", "content": user_message}
                ]
            )
            # The reply text is in the first content block
            return response.content[0].text
        except Exception as e:
            # Return the error details as text instead of raising
            return f"Error processing request: {str(e)}"

    def batch_process(self, document: str, questions: List[str]) -> Dict[str, str]:
        """
        Process multiple questions about the same document.

        Args:
            document: The text document to use as context
            questions: List of questions to answer

        Returns:
            Dictionary mapping questions to answers
        """
        results = {}
        for question in questions:
            # Store each answer under its question
            results[question] = self.process_question(document, question)
        return results

### Test Code

if __name__ == "__main__":
    # Sample document (an instruction manual excerpt)
    sample_document = """
    QUICKSTART GUIDE: MODEL X3000 COFFEE MAKER

    SETUP INSTRUCTIONS:
    1. Unpack the coffee maker and remove all packaging materials.
    2. Rinse the water reservoir and fill with fresh, cold water up to the MAX line.
    3. Insert the gold-tone filter into the filter basket.
    4. Add ground coffee (1 tbsp per cup recommended).
    5. Close the lid and ensure the carafe is properly positioned on the warming plate.
    6. Plug in the coffee maker and press the POWER button.
    7. Press the BREW button to start brewing.

    FEATURES:
    - Programmable timer: Set up to 24 hours in advance
    - Strength control: Choose between Regular, Strong, and Bold
    - Auto-shutoff: Machine turns off automatically after 2 hours
    - Pause and serve: Remove carafe during brewing for up to 30 seconds

    CLEANING:
    - Daily: Rinse removable parts with warm water
    - Weekly: Clean carafe and filter basket with mild detergent
    - Monthly: Run a descaling cycle using white vinegar solution (1:2 vinegar to water)

    TROUBLESHOOTING:
    - Coffee not brewing: Check water reservoir and power connection
    - Weak coffee: Use STRONG setting or add more coffee grounds
    - Overflow: Ensure filter is properly seated and use correct amount of coffee
    - Error E01: Contact customer service for heating element replacement
    """

    # Sample questions
    sample_questions = [
        "How much coffee should I use per cup?",
        "How do I clean the coffee maker?",
        "What does error code E02 mean?",
        "What is the auto-shutoff time?",
        "How long can I remove the carafe during brewing?"
    ]

    # Create and use the agent
    agent = ClaudeDocumentQA()

    # Process a single question
    print("=== Single Question ===")
    answer = agent.process_question(sample_document, sample_questions[0])
    print(f"Q: {sample_questions[0]}")
    print(f"A: {answer}\n")

    # Process multiple questions
    print("=== Batch Processing ===")
    results = agent.batch_process(sample_document, sample_questions)
    for question, answer in results.items():
        print(f"Q: {question}")
        print(f"A: {answer}\n")

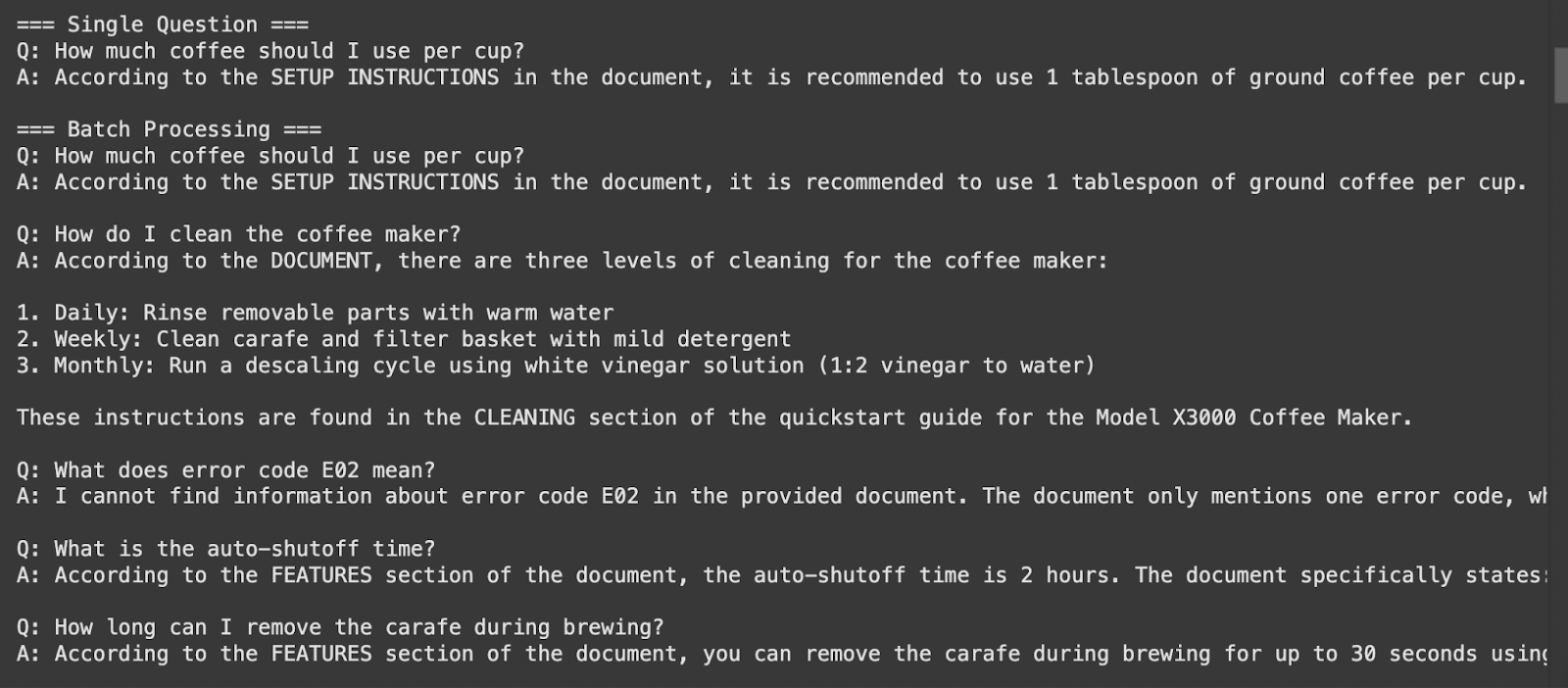

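Model output

Claude Document QA: A Specialized LLM Application

This Claude document QA agent shows a practical use of LLM APIs for context-aware question answering. It uses Anthropic's Claude API to build a system that generates answers strictly from the content of a supplied document, a capability that many enterprise use cases depend on.

The agent works by wrapping Claude's language capabilities in a specialized framework that:

- Accepts a reference document and a user question as input

- Structures the prompt to separate the document context from the query

- Uses system instructions to restrict Claude to information present in the document

- Handles information that is missing from the document explicitly

- Supports both single-question and batch processing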
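The first four steps in the list can be sketched without any network call. This is a minimal illustration, not the class's actual code: the helper names `build_prompt` and `is_not_found` are hypothetical and not part of the Anthropic SDK, and the fallback sentence is the one used in the example's system prompt.

```python
from typing import Dict

# Fallback sentence copied from the example's system prompt. The helper
# names below are illustrative assumptions, not part of any SDK.
NOT_FOUND = "I cannot find information about this in the provided document."


def build_prompt(document: str, question: str) -> Dict[str, str]:
    """Return system and user strings, keeping document and question apart."""
    system = (
        "You are a helpful assistant that answers questions based ONLY on the "
        "information provided in the DOCUMENT below. If the answer cannot be "
        f'found in the document, say "{NOT_FOUND}"'
    )
    # Labeled sections let the model tell the context from the query
    user = f"DOCUMENT:\n{document.strip()}\n\nQUESTION:\n{question.strip()}"
    return {"system": system, "user": user}


def is_not_found(answer: str) -> bool:
    """True when the reply contains the agreed fallback sentence."""
    return NOT_FOUND.lower() in answer.lower()


prompt = build_prompt("Auto-shutoff: 2 hours", "What does error code E02 mean?")
print(prompt["user"])
```

A check such as `is_not_found` lets calling code route unanswered questions elsewhere, for example to a human agent, instead of showing the fallback text to the user.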

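This approach is especially valuable where answers must stay faithful to specific content, for example customer support automation, legal document analysis, technical documentation retrieval, or educational applications. The implementation shows how careful prompt engineering and system design can turn a general-purpose LLM into a specialized tool for a specific domain.

By combining a simple API integration with deliberate constraints on model behavior, this example shows how developers can build reliable, context-aware AI applications without expensive fine-tuning or complex infrastructure.

Note: This is only a basic implementation of document question answering; it does not go into domain-specific details.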

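Implementing Open-Source LLMs: Local Deployment and Adaptability

Open-source LLMs offer a flexible, customizable alternative to closed-source options. Developers can deploy them on their own infrastructure and keep full control over implementation details. These models come from organizations such as Meta (LLaMA), Mistral AI, and various research institutions, and balance performance against accessibility across different deployment scenarios.

Open-source LLM implementations are characterized by:

- Local deployment: models can run on personal hardware or self-managed cloud infrastructure

- Customization options: models can be fine-tuned, quantized, or modified for specific needs

- Resource scaling: performance can be tuned to the available compute

- Privacy: data stays in a controlled environment, with no external API calls

- Cost structure: costs come from the compute you provision (hardware or cloud instances) rather than from per-token pricing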
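To make the hardware side of local deployment and quantization concrete, here is a rough back-of-the-envelope sketch. It counts model weights only (parameters times bits per parameter) and ignores activations, the KV cache, and runtime overhead, so real requirements are higher. The function name and the 7-billion-parameter size are illustrative assumptions.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the model weights alone, in GB (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Illustrative model size: 7 billion parameters at three common precisions
for bits in (16, 8, 4):
    print(f"7B parameters at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

Under these assumptions, halving the precision halves the weight footprint, which is why quantized models fit on consumer hardware that cannot hold the 16-bit version.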

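Major open-source model families include:

- LLaMA/Llama-2: Meta's powerful foundation models; Llama 2 is released under a license that permits commercial use

- Mistral: models that perform strongly despite relatively small parameter counts

- Falcon: training-efficient models from TII with competitive performance

- Pythia: research-oriented models with extensive documentation of the training methodology

These models can be deployed through frameworks such as Hugging Face Transformers, llama.cpp, or Ollama, which provide abstractions that simplify implementation while keeping the benefits of local control. Although they usually need more technical setup than API-based alternatives, open-source LLMs offer advantages in cost management for high-volume applications, data privacy, and customization for domain-specific needs.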
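As one possible illustration of local deployment, the sketch below calls a model served by Ollama over its local HTTP API using only the Python standard library. It assumes an Ollama server is already running on its default port and that a model has been pulled; the model name `llama2` and the helper names `build_request` and `generate` are assumptions for illustration.

```python
import json
import urllib.request

# Assumptions: Ollama is running locally on its default port, and a model
# named "llama2" has already been pulled. Adjust both to your setup.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build the POST request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama2") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


# Example call (needs a running server):
# print(generate("How much coffee should I use per cup?"))
```

Because the request never leaves the machine, the same document QA prompt used with the hosted API could be sent here without exposing the document to an external service.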