Using LLMs to Convert Unstructured Text into Interactive Knowledge Graphs

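Knowledge graphs offer a powerful way to represent information. They consist of entities (nodes) and the relationships between them (edges), which makes it much easier to see how things are connected than when the same information sits in unstructured text.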

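Traditionally, building a knowledge graph from raw text has not been easy. It requires identifying entities and their relationships, either by writing hand-coded extraction rules or by using specialized machine learning models. LLMs, however, are flexible enough to do this job. Because they can read free-form text and output structured information, they can be used as part of an automated pipeline for creating knowledge graphs, as we will see in this article.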

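In this article, we'll explore a learning project I created. Its goal is an LLM-powered pipeline that takes unstructured text and turns it into an interactive knowledge graph web page.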

A Simple Example

Here is a simple example of what the project does. Suppose it is given the following unstructured text as input:

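Henry, a talented musician from Canada, first trained in classical piano under the renowned conductor Maria Rodriguez. Later, Henry and his sister Lucy, who had studied music composition at the University of Toronto, formed a rock band called The Maple Leaves. The Maple Leaves released their debut album, "Northern Lights," on August 12, 2020, and it was widely praised for blending classical and rock styles. Lucy also devoted herself to environmental activism, joining the organization Clean Earth as a regional ambassador, where she campaigned for stronger wildlife protection laws. Inspired by Lucy's passion for charity work, Henry began volunteering at a local animal shelter in his hometown. Although Henry and Lucy initially had creative differences, they eventually found harmony by combining Lucy's classical compositions with Henry's rock guitar riffs. The Maple Leaves toured Europe in 2021, selling out shows in major cities such as Paris, Berlin, and Rome. During the tour, Henry developed a keen interest in international cuisine and worked with local chefs on a short documentary about regional cooking techniques.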

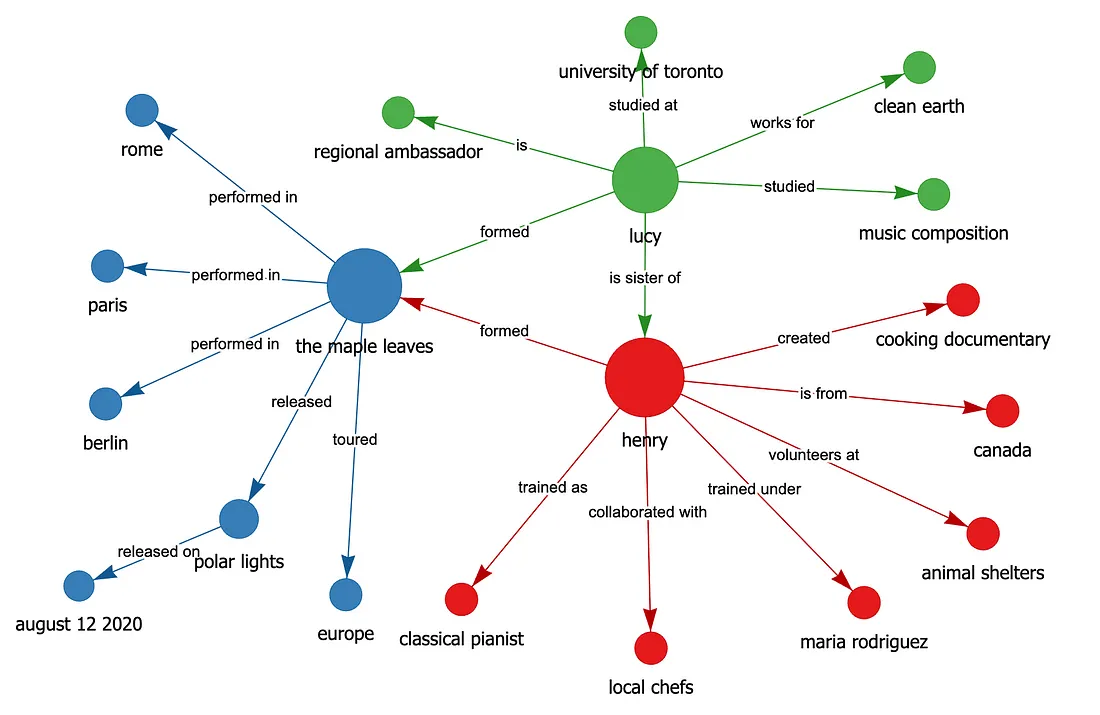

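The "ai-knowledge-graph" tool uses the LLM you have chosen and configured to extract knowledge from the text above. It then writes the resulting knowledge graph to an HTML file, which looks similar to the following image: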

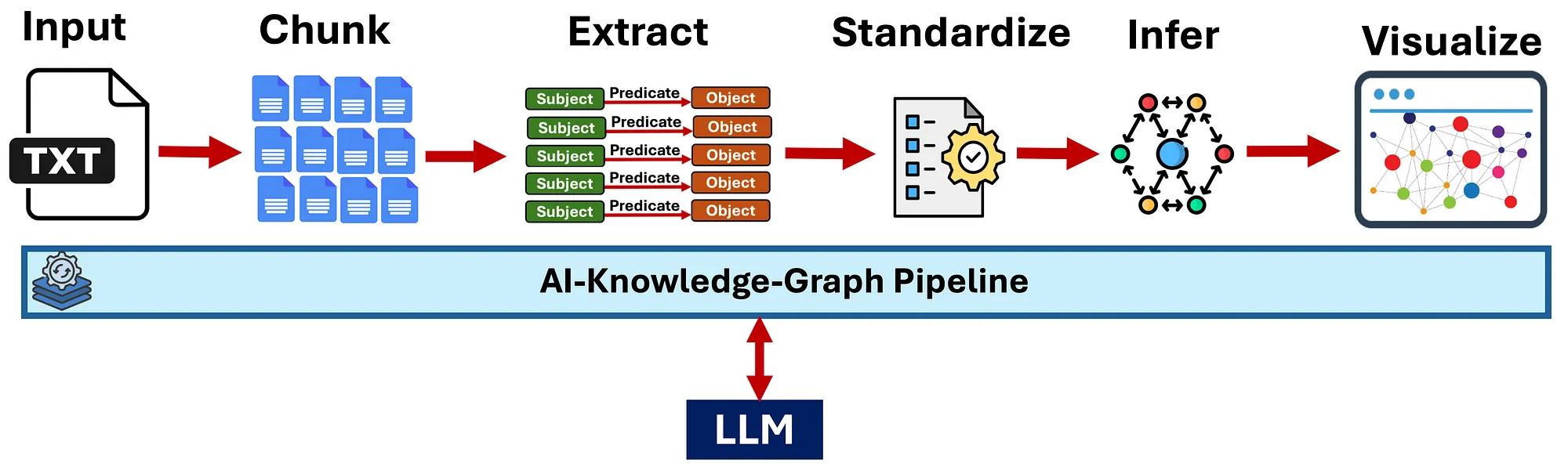

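How it works, at a high level:

1. Text chunking: Large documents are automatically split into smaller, manageable chunks.

2. Knowledge extraction: An LLM then identifies and extracts subject-predicate-object triples (facts) from each chunk.

3. Entity standardization: Different mentions of the same entity (for example, "AI" and "artificial intelligence") are unified under a single name.

4. Relationship inference: Additional relationships are inferred using simple logical rules (such as transitivity) and LLM reasoning, connecting otherwise disconnected subgraphs.

5. Interactive visualization: The resulting graph is displayed as an interactive network in your browser.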

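How It Works in Detail

Text Chunking

LLMs have context window limits, and local systems also have memory limits. To handle large documents, the tool automatically splits the text into chunks, for example 500 words per chunk with some overlap between neighboring chunks. The overlap helps preserve context for sentences that fall on a chunk boundary. Each chunk is then combined with a prompt instructing the LLM to extract subject-predicate-object (SPO) triples, and the result is sent to the LLM.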
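Here is a minimal sketch of word-based chunking with overlap. It assumes chunk size and overlap are counted in whitespace-separated words, as the text describes. `chunk_text` is a hypothetical helper, not the tool's own function.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of up to chunk_size words; each chunk
    repeats the last `overlap` words of the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```

Consider a 966-word input with the default settings. Because each chunk starts 450 words after the previous one, the sketch returns chunks of 500, 500, and 66 words, and neighboring chunks share 50 words.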

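LLM-Powered Extraction

For each chunk, the tool asks the LLM to output a JSON array of triples. Each triple is tagged with the number of the chunk it was extracted from. Here is an example: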

```json
[
  {
    "subject": "eli whitney",
    "predicate": "invented",
    "object": "cotton gin",
    "chunk": 1
  },
  {
    "subject": "Industrial Revolution",
    "predicate": "reshapes",
    "object": "economic systems",
    "chunk": 1
  },
  {
    "subject": "amazon",
    "predicate": "transformed",
    "object": "retail",
    "chunk": 3
  }
]
```

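The prompt encourages consistent entity naming and short relationship phrases (one to three words), and it asks the model to replace pronouns with the entities they refer to. The triples extracted from all chunks are then merged into an initial, raw knowledge graph.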
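Turning raw model output into one merged list of triples might look like the sketch below. Several details are assumptions for illustration, since the article only says the prompt asks for these properties:

- the helper names;
- tolerating prose around the JSON;
- lowercasing every field;
- dropping predicates longer than three words as a post-check.

```python
import json


def parse_triples(response: str, chunk_index: int) -> list[dict]:
    """Parse one LLM response into triples tagged with their chunk number."""
    # Models sometimes wrap the array in prose or a code fence, so take the
    # span from the first "[" to the last "]".
    start, end = response.find("["), response.rfind("]")
    if start == -1 or end < start:
        return []
    try:
        data = json.loads(response[start:end + 1])
    except json.JSONDecodeError:
        return []
    triples = []
    for item in data:
        if not isinstance(item, dict):
            continue
        values = [item.get(k) for k in ("subject", "predicate", "object")]
        if not all(isinstance(v, str) and v.strip() for v in values):
            continue  # skip incomplete or malformed triples
        subject, predicate, obj = (v.strip().lower() for v in values)
        if len(predicate.split()) > 3:
            continue  # hypothetical post-check mirroring the 3-word rule
        triples.append({"subject": subject, "predicate": predicate,
                        "object": obj, "chunk": chunk_index})
    return triples


def merge_responses(responses: list[str]) -> list[dict]:
    """Combine per-chunk responses (chunks numbered from 1) into one list."""
    merged = []
    for index, response in enumerate(responses, start=1):
        merged.extend(parse_triples(response, index))
    return merged
```

A response that fails to parse contributes no triples rather than aborting the run. This is one reasonable choice when the model occasionally returns malformed JSON.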

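Standardizing Entities Across Chunks

After extraction, you will often find the same entity mentioned in many different forms (for example, "AI", "A.I.", and "artificial intelligence"). To avoid fragmented or duplicate nodes, the tool provides an entity standardization step: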

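- Basic normalization: Obvious duplicates are merged by lowercasing text, trimming whitespace, and similar operations.

- Standardization (optional): When enabled, the LLM groups mentions that likely refer to the same entity. For example, "New York", "NYC", and "New York City" become one canonical node, and "United States", "U.S.", and "USA" become another.

This improves the coherence of the graph and is generally recommended. If you need the strict, raw extraction results, you can disable it in the configuration file.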
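Here is a minimal sketch of both passes. It assumes the LLM's groupings have already been parsed into a dictionary that maps each canonical name to its aliases. This format is hypothetical, because the article does not show the standardization prompt or its output. Merging aliases can collapse a triple into a self-loop, so the sketch drops those. That matches the "Removed ... self-referencing triples" line in the run log further down.

```python
import re


def normalize(name: str) -> str:
    """Basic normalization: lowercase and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", name).strip().lower()


def standardize(triples: list[dict], groups: dict[str, list[str]]) -> list[dict]:
    """Map every alias to its canonical name, then drop self-references
    and exact duplicates. `groups` maps canonical name -> aliases."""
    canonical_of = {}
    for canonical, aliases in groups.items():
        for alias in [canonical, *aliases]:
            canonical_of[normalize(alias)] = normalize(canonical)

    def resolve(name: str) -> str:
        key = normalize(name)
        return canonical_of.get(key, key)  # unknown names keep their basic form

    seen = set()
    result = []
    for t in triples:
        s, p, o = resolve(t["subject"]), normalize(t["predicate"]), resolve(t["object"])
        if s == o:
            continue  # alias merging turned this triple into a self-loop
        if (s, p, o) in seen:
            continue  # the same fact was already kept
        seen.add((s, p, o))
        result.append({**t, "subject": s, "predicate": p, "object": o})
    return result
```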

Inferring Hidden Connections to Enrich the Graph

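Even a thorough reading of the text can miss implicit relationships. The tool addresses this in two ways.

Rule-based inference:

- Transitive relationships: If A contributed to B, and B drove C, the system can infer that A influenced C.

- Lexical similarity: Entities with similar names may be linked by a generic "related to" relationship.

LLM-assisted inference:

- The tool can prompt the LLM to propose connections between otherwise disconnected subgraphs. For example, if one cluster is about the Industrial Revolution and another is about AI, the LLM might infer a historical or conceptual link ("AI is a product of the technological innovation that began with the Industrial Revolution").

- These edges are marked differently (for example, drawn as dashed lines) to distinguish them from explicitly stated facts.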
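Both rules can be sketched in plain Python. The following are assumptions for illustration, not the tool's actual choices:

- the generic predicates "influences" and "related to";
- using `difflib.SequenceMatcher` to measure name similarity;
- the 0.8 threshold.

The sketch also applies the transitive rule to every edge, regardless of predicate. A real implementation would probably restrict it to causal-sounding relations.

```python
from difflib import SequenceMatcher


def infer_transitive(triples: list[dict]) -> list[dict]:
    """If A -> B and B -> C, and A has no direct edge to C, add "A influences C"."""
    outgoing: dict[str, set[str]] = {}
    for t in triples:
        outgoing.setdefault(t["subject"], set()).add(t["object"])
    pairs = set()  # a set, so several A -> B -> C paths yield a single edge
    for a, direct in outgoing.items():
        for b in direct:
            for c in outgoing.get(b, set()):
                if c != a and c not in direct:
                    pairs.add((a, c))
    return [
        {"subject": a, "predicate": "influences", "object": c, "inferred": True}
        for a, c in sorted(pairs)
    ]


def infer_lexical(entities: list[str], threshold: float = 0.8) -> list[dict]:
    """Link pairs of entities whose names are similar strings."""
    names = sorted(set(entities))
    found = []
    for i, x in enumerate(names):
        for y in names[i + 1:]:
            if SequenceMatcher(None, x, y).ratio() >= threshold:
                found.append(
                    {"subject": x, "predicate": "related to", "object": y, "inferred": True}
                )
    return found
```

Every inferred edge carries `"inferred": True` instead of a chunk number. A later visualization step can use that flag to tell these edges apart from extracted ones.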

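This inference step typically adds many new relationships, greatly reducing the number of isolated subnetworks. If you want a graph derived purely from the text, you can disable it in the configuration file. Instead of a chunk number, each inferred relationship carries an attribute marking it as inferred. This attribute matters because the visualization uses it to draw inferred relationships as dashed edges. An example structure: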

```json
[
  {
    "subject": "electrification",
    "predicate": "enables",
    "object": "Manufacturing Automation",
    "inferred": true
  },
  {
    "subject": "tim berners-lee",
    "predicate": "expanded via internet",
    "object": "information sharing",
    "inferred": true
  }
]
```
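Before the LLM can be asked to bridge disconnected subgraphs, the pipeline has to know what those subgraphs are. Here is a minimal sketch that uses union-find to group entities into connected components, ignoring edge direction. The tool's run log reports "disconnected communities", but whether it computes them this way is an assumption. `find_components` is a hypothetical helper.

```python
def find_components(triples: list[dict]) -> list[set[str]]:
    """Group entities into connected components, largest first."""
    parent: dict[str, str] = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for t in triples:
        root_s, root_o = find(t["subject"]), find(t["object"])
        if root_s != root_o:
            parent[root_s] = root_o  # union the two components

    components: dict[str, set[str]] = {}
    for node in parent:
        components.setdefault(find(node), set()).add(node)
    return sorted(components.values(), key=len, reverse=True)
```

A few entity names from each component could then be placed in a prompt that asks the LLM for plausible links between them. That prompt is not shown in the article.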

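LLM Prompts

When all options are enabled, the tool sends four prompts to the LLM. The first performs the initial subject-predicate-object (SPO) knowledge extraction.

Extraction system prompt: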

```text
You are an advanced AI system specialized in knowledge extraction and knowledge graph generation.
Your expertise includes identifying consistent entity references and meaningful relationships in text.
CRITICAL INSTRUCTION: All relationships (predicates) MUST be no more than 3 words maximum. Ideally 1-2 words. This is a hard limit.
```

Extraction user prompt:

```text
Your task: Read the text below (delimited by triple backticks) and identify all Subject-Predicate-Object (S-P-O) relationships in each sentence. Then produce a single JSON array of objects, each representing one triple.

Follow these rules carefully:
- Entity Consistency: Use consistent names for entities throughout the document. For example, if "John Smith" is mentioned as "John", "Mr. Smith", and "John Smith" in different places, use a single consistent form (preferably the most complete one) in all triples.
- Atomic Terms: Identify distinct key terms (e.g., objects, locations, organizations, acronyms, people, conditions, concepts, feelings). Avoid merging multiple ideas into one term (they should be as "atomistic" as possible).
- Unified References: Replace any pronouns (e.g., "he," "she," "it," "they," etc.) with the actual referenced entity, if identifiable.
- Pairwise Relationships: If multiple terms co-occur in the same sentence (or a short paragraph that makes them contextually related), create one triple for each pair that has a meaningful relationship.
- CRITICAL INSTRUCTION: Predicates MUST be 1-3 words maximum. Never more than 3 words. Keep them extremely concise.
- Ensure that all possible relationships are identified in the text and are captured in an S-P-O relation.
- Standardize terminology: If the same concept appears with slight variations (e.g., "artificial intelligence" and "AI"), use the most common or canonical form consistently.
- Make all the text of S-P-O text lower-case, even Names of people and places.
- If a person is mentioned by name, create a relation to their location, profession and what they are known for (invented, wrote, started, title, etc.) if known and if it fits the context of the information.

Important Considerations:
- Aim for precision in entity naming - use specific forms that distinguish between similar but different entities
- Maximize connectedness by using identical entity names for the same concepts throughout the document
- Consider the entire context when identifying entity references
- ALL PREDICATES MUST BE 3 WORDS OR FEWER - this is a hard requirement

Output Requirements:
- Do not include any text or commentary outside of the JSON.
- Return only the JSON array, with each triple as an object containing "subject", "predicate", and "object".
- Make sure the JSON is valid and properly formatted.
```

Three more prompts, not listed here, guide the LLM through entity standardization and relationship inference.
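Sending one chunk to an OpenAI-compatible endpoint comes down to a single chat-completions request. The sketch below makes three assumptions:

- The system prompt goes in the system message.
- The user prompt goes in the user message, followed by the chunk wrapped in triple backticks.
- The request is posted directly to `base_url`, because the example configuration stores the full `/v1/chat/completions` URL.

Both prompts are abbreviated, and the helper names are hypothetical.

```python
import json
import urllib.request

FENCE = "`" * 3  # triple backticks delimit the chunk, as the user prompt says

# Abbreviated here; use the full prompt texts in practice.
SYSTEM_PROMPT = "You are an advanced AI system specialized in knowledge extraction and knowledge graph generation. ..."
USER_PROMPT = "Your task: Read the text below (delimited by triple backticks) and identify all Subject-Predicate-Object (S-P-O) relationships in each sentence. ..."


def build_request(chunk: str, model: str, temperature: float = 0.2,
                  max_tokens: int = 8192) -> dict:
    """Build an OpenAI-style chat-completions payload for one chunk."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"{USER_PROMPT}\n\n{FENCE}\n{chunk}\n{FENCE}"},
        ],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def send(payload: dict, base_url: str, api_key: str) -> str:
    """POST the payload and return the assistant's reply text."""
    request = urllib.request.Request(
        base_url,  # full .../v1/chat/completions URL, as in the example config
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the example configuration, a call would look like `send(build_request(chunk, "gemma3"), "http://localhost:11434/v1/chat/completions", "sk-1234")`.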

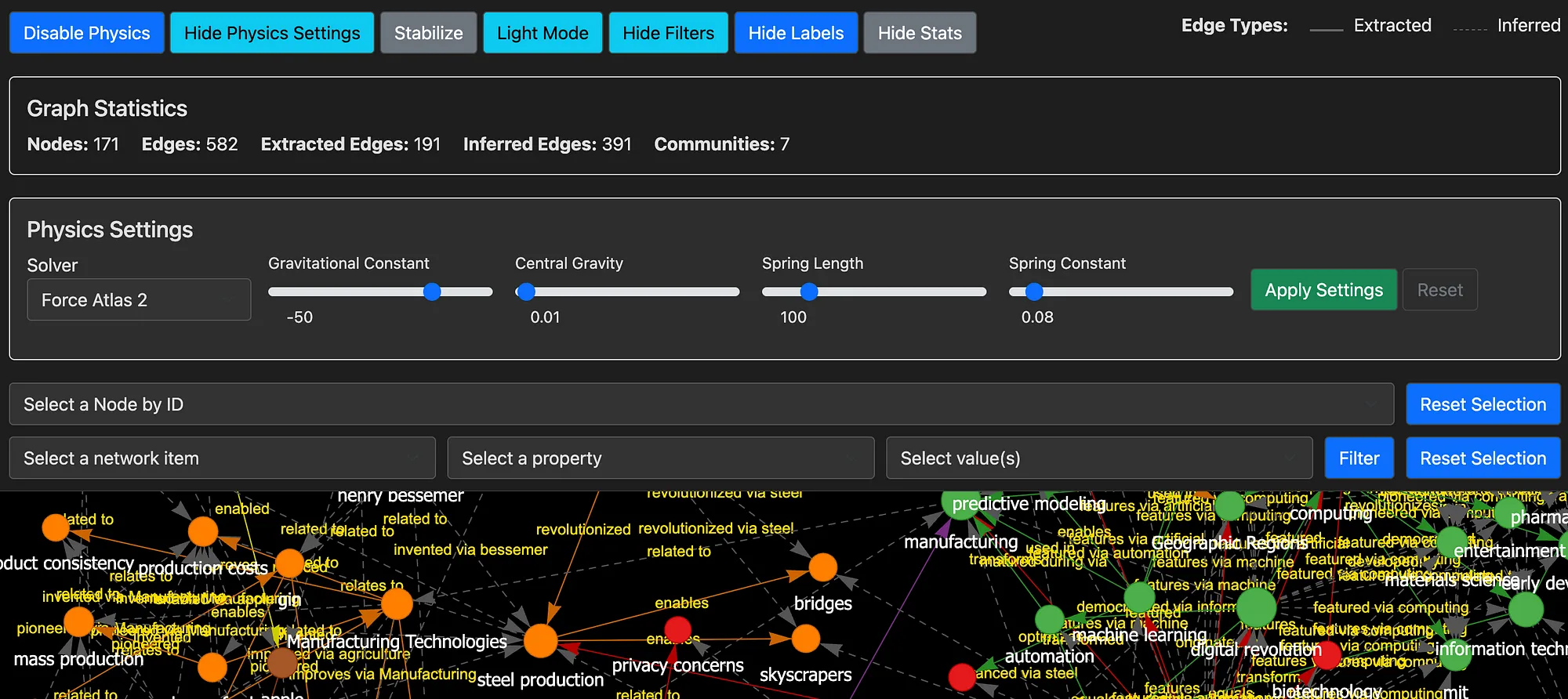

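Interactive Graph Visualization

Using the complete list of SPO triples (both extracted and inferred), the tool generates an interactive HTML visualization with PyVis, a Python interface to vis.js. Open the generated file in your browser and you will see:

- Color-coded groups: Nodes in the same group share a color. The groups often correspond to subtopics or themes in the text.

- Node size by importance: Nodes with more connections (or greater centrality) are drawn larger.

- Edge styles: Solid lines indicate relationships extracted from the text; dashed lines indicate inferred relationships.

- Interactive controls: You can pan, zoom, drag nodes, toggle physics, switch between light and dark modes, and filter the view.

This makes it easier to explore the relationships in a visually appealing format.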
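The mapping from triples to what the browser shows can be sketched as follows. Three parts of this sketch are assumptions:

- Node degree stands in for "importance".
- Connected components stand in for the tool's grouping. Its run log mentions the Louvain method, which is not implemented here.
- The palette and the size formula are arbitrary.

Only `render` needs PyVis. It uses `Network`, `add_node`, `add_edge`, and `write_html`, and it passes the vis.js `dashes` edge option through for inferred edges.

```python
from collections import Counter, deque

PALETTE = ["#e6194b", "#3cb44b", "#4363d8", "#f58231", "#911eb4", "#46f0f0"]


def build_graph_spec(triples: list[dict]) -> tuple[list[dict], list[dict]]:
    """Compute node size (by degree), node group/color, and edge style."""
    degree: Counter = Counter()
    neighbors: dict[str, set[str]] = {}
    for t in triples:
        s, o = t["subject"], t["object"]
        degree[s] += 1
        degree[o] += 1
        neighbors.setdefault(s, set()).add(o)
        neighbors.setdefault(o, set()).add(s)

    # Breadth-first search assigns one group id per connected component
    # (a simple stand-in for real community detection).
    group: dict[str, int] = {}
    next_group = 0
    for start in neighbors:
        if start in group:
            continue
        group[start] = next_group
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nb in neighbors[node]:
                if nb not in group:
                    group[nb] = next_group
                    queue.append(nb)
        next_group += 1

    nodes = [{"id": n, "label": n, "size": 10 + 5 * degree[n], "group": group[n],
              "color": PALETTE[group[n] % len(PALETTE)]} for n in neighbors]
    edges = [{"source": t["subject"], "to": t["object"], "title": t["predicate"],
              "dashes": bool(t.get("inferred"))} for t in triples]
    return nodes, edges


def render(triples: list[dict], path: str = "graph.html") -> None:
    """Write an interactive HTML graph (requires: pip install pyvis)."""
    from pyvis.network import Network

    nodes, edges = build_graph_spec(triples)
    net = Network(height="750px", width="100%", directed=True)
    for n in nodes:
        net.add_node(n["id"], label=n["label"], size=n["size"], color=n["color"])
    for e in edges:
        # Extra keyword options are passed through to vis.js; dashes draws a dashed edge.
        net.add_edge(e["source"], e["to"], title=e["title"], dashes=e["dashes"])
    net.write_html(path)
```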

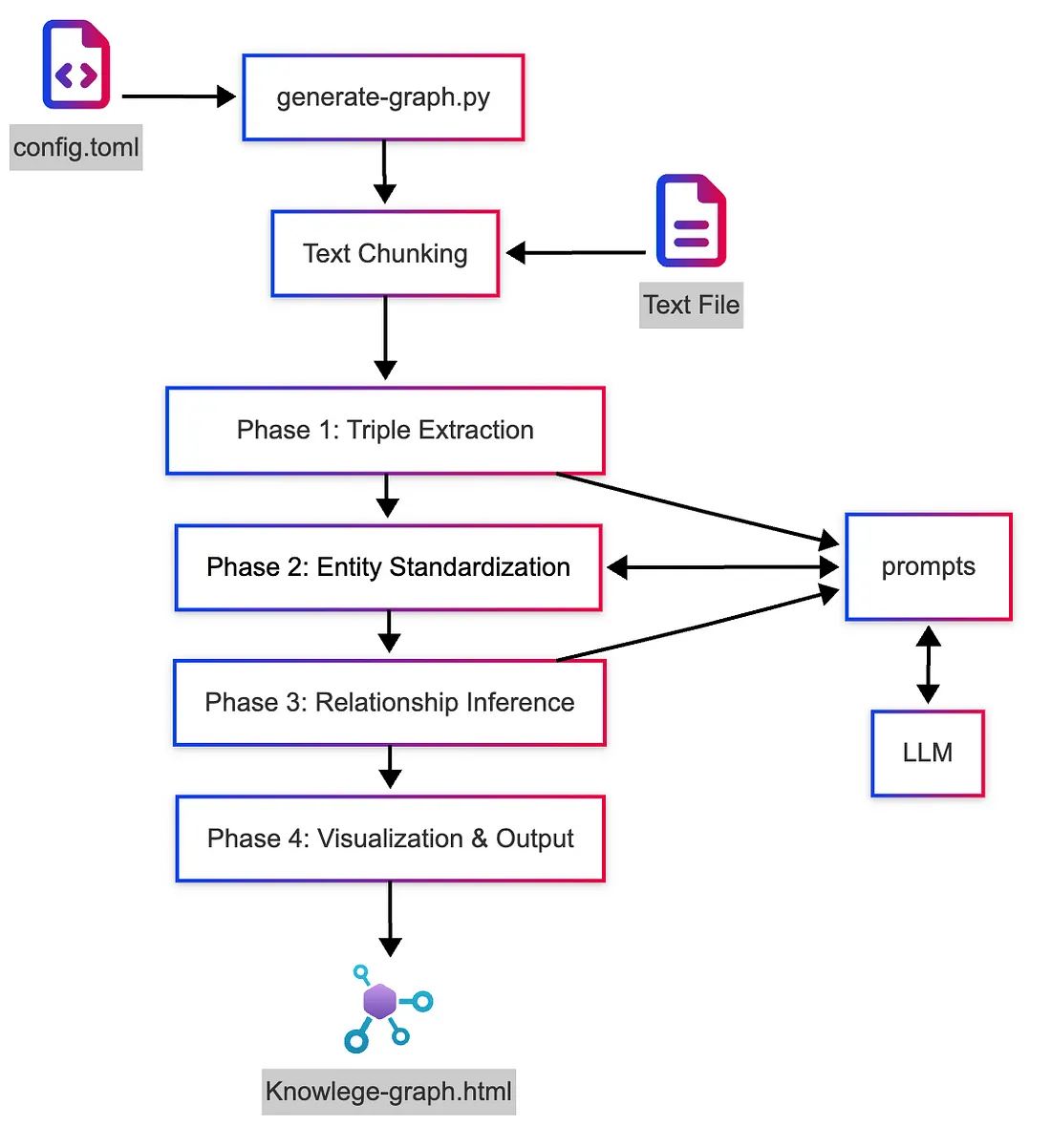

Program Flow

Below is the program's basic flow (a more detailed program flow diagram is available in the repository's README.md):

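Installing AI Knowledge Graph

To run this program on your computer, you need the following.

Requirements:

- A computer to run it on (Windows, Linux, or macOS)

- Python (3.12 or later recommended)

- Access to an OpenAI-compatible API endpoint (Ollama, LiteLLM, LM Studio, an OpenAI subscription, etc.)

- Git, to clone the repository

Download and install the dependencies:

Clone the repository onto the system where you will run the program, then change into its directory: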

```bash
git clone https://github.com/robert-mcdermott/ai-knowledge-graph.git
cd ai-knowledge-graph
```

Install the dependencies with `uv`:

```bash
uv sync
```

Or install them with `pip`:

```bash
pip install -r requirements.txt
```

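Configuring AI Knowledge Graph

Edit the `config.toml` file to suit your setup. Here you configure the LLM to use, its endpoint (URL), the maximum number of tokens (`max_tokens`), and the temperature. In the example below, I'm using Google's open Gemma 3 model, hosted locally with Ollama. The same file also controls the chunk size and overlap used to split documents, and whether to standardize entities and infer additional relationships.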

```toml
[llm]
model = "gemma3"
api_key = "sk-1234"
base_url = "http://localhost:11434/v1/chat/completions"
max_tokens = 8192
temperature = 0.2

[chunking]
chunk_size = 200 # Number of words per chunk
overlap = 20 # Number of words to overlap between chunks

[standardization]
enabled = true # Whether to enable entity standardization
use_llm_for_entities = true # Whether to use LLM for additional entity resolution

[inference]
enabled = true # Whether to enable relationship inference
use_llm_for_inference = true # Whether to use LLM for relationship inference
apply_transitive = true # Whether to apply transitive inference rules

[visualization]
edge_smooth = false # Smooth edge lines true or false
```

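Creating a Knowledge Graph

Now that you have installed "ai-knowledge-graph" and configured it to point at your LLM, you can create your first knowledge graph. Get a plain-text document to build the graph from; the tool currently works only with text documents.

Next, run the `generate-graph.py` script. Here is its help message: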

```text
usage: generate-graph.py [-h] [--test] [--config CONFIG] [--output OUTPUT] [--input INPUT] [--debug] [--no-standardize] [--no-inference]

Knowledge Graph Generator and Visualizer

options:
  -h, --help        show this help message and exit
  --test            Generate a test visualization with sample data
  --config CONFIG   Path to configuration file
  --output OUTPUT   Output HTML file path
  --input INPUT     Path to input text file (required unless --test is used)
  --debug           Enable debug output (raw LLM responses and extracted JSON)
  --no-standardize  Disable entity standardization
  --no-inference    Disable relationship inference
```

Here is an example that creates a knowledge graph from a text document named `mydocument.txt` in the current directory. If you are using `uv`, replace `python` with `uv run`.

```bash
python generate-graph.py --input mydocument.txt --output mydocument.html
```

Here is the console output from a complete run of the command above:

```text
python generate-graph.py --input mydocument.txt --output mydocument.html
Using input text from file: mydocument.txt
==================================================
PHASE 1: INITIAL TRIPLE EXTRACTION
==================================================
Processing text in 3 chunks (size: 500 words, overlap: 50 words)
Processing chunk 1/3 (500 words)
Processing chunk 2/3 (500 words)
Processing chunk 3/3 (66 words)
Extracted a total of 73 triples from all chunks
==================================================
PHASE 2: ENTITY STANDARDIZATION
==================================================
Starting with 73 triples and 106 unique entities
Standardizing entity names across all triples...
Applied LLM-based entity standardization for 15 entity groups
Removed 8 self-referencing triples
Standardized 106 entities into 101 standard forms
After standardization: 65 triples and 72 unique entities
==================================================
PHASE 3: RELATIONSHIP INFERENCE
==================================================
Starting with 65 triples
Top 5 relationship types before inference:
- pioneered: 9 occurrences
- invented: 7 occurrences
- developed: 6 occurrences
- develops: 6 occurrences
- was: 4 occurrences
Inferring additional relationships between entities...
Identified 18 disconnected communities in the graph
Inferred 27 new relationships between communities
Inferred 30 new relationships between communities
Inferred 6 new relationships within communities
Inferred 8 relationships based on lexical similarity
Added 51 inferred relationships
Top 5 relationship types after inference:
- invented: 7 occurrences
- pioneered: 6 occurrences
- developed: 6 occurrences
- develops: 6 occurrences
- related to: 6 occurrences
Added 57 inferred relationships
Final knowledge graph: 116 triples
Saved raw knowledge graph data to mydocument.json
Processing 116 triples for visualization
Found 72 unique nodes
Found 55 inferred relationships
Detected 12 communities using Louvain method
Knowledge graph visualization saved to mydocument.html
Knowledge Graph Statistics:
Nodes: 72
Edges: 116 (55 inferred)
Communities: 12
To view the visualization, open the following file in your browser:
file:///Users/robertm/mycode/ai-knowledge-graph/mydocument.html
```

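Now open the generated HTML file in your web browser to explore the knowledge graph.

From there, use the menu at the top of the page to expand the control panel. You can adjust the layout physics, hide or show node and edge labels, view graph statistics, and select or filter nodes and edges. There is also a dark mode, as shown in the example below:

Experimenting with Different Settings Is Important

It's a good idea to experiment with different chunk sizes, overlap sizes, and LLMs to see what difference they make. I usually set the overlap to 10% of the chunk size. Small chunks (around 100 to 200 words) seem to extract more relationships than larger ones. However, concepts or groups that end up spread across many small chunks may then have fewer relationships to the rest of the graph. You'll need to experiment to find the right chunk size and model for your documents. I'm also confident that the prompts could be tuned to improve the results considerably.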
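One way to run such experiments is a small sweep script. It writes one config file per chunk size, keeps the overlap at the 10% rule of thumb above, and calls `generate-graph.py` with the documented `--config`, `--input`, and `--output` flags. This is a sketch with several assumptions:

- The output file naming is made up for illustration.
- `config.toml` is edited with regular expressions.
- The script runs from the repository directory.
- `sys.executable` is the interpreter that has the dependencies installed (for example, when the script is started with `uv run`).

```python
import re
import subprocess
import sys
from pathlib import Path


def config_variant(base_toml: str, chunk_size: int) -> str:
    """Return base_toml with a new chunk_size and overlap set to 10% of it."""
    overlap = chunk_size // 10
    # (?m) makes ^ match at every line start; trailing comments are kept
    text = re.sub(r"(?m)^chunk_size\s*=\s*\d+", f"chunk_size = {chunk_size}", base_toml)
    return re.sub(r"(?m)^overlap\s*=\s*\d+", f"overlap = {overlap}", text)


def run_sweep(input_file: str, sizes: list[int], base_config: str = "config.toml") -> None:
    """Generate one graph per chunk size, e.g. mydocument-100.html."""
    base = Path(base_config).read_text(encoding="utf-8")
    stem = Path(input_file).stem
    for size in sizes:
        config_path = Path(f"config-{size}.toml")
        config_path.write_text(config_variant(base, size), encoding="utf-8")
        subprocess.run(
            [sys.executable, "generate-graph.py",
             "--config", str(config_path),
             "--input", input_file,
             "--output", f"{stem}-{size}.html"],
            check=True,  # stop the sweep if a run fails
        )
```

For example, `run_sweep("mydocument.txt", [100, 200, 500])` produces three HTML graphs that can be compared side by side in the browser.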