模型:

castorini/aggretriever-cocondenser

英文

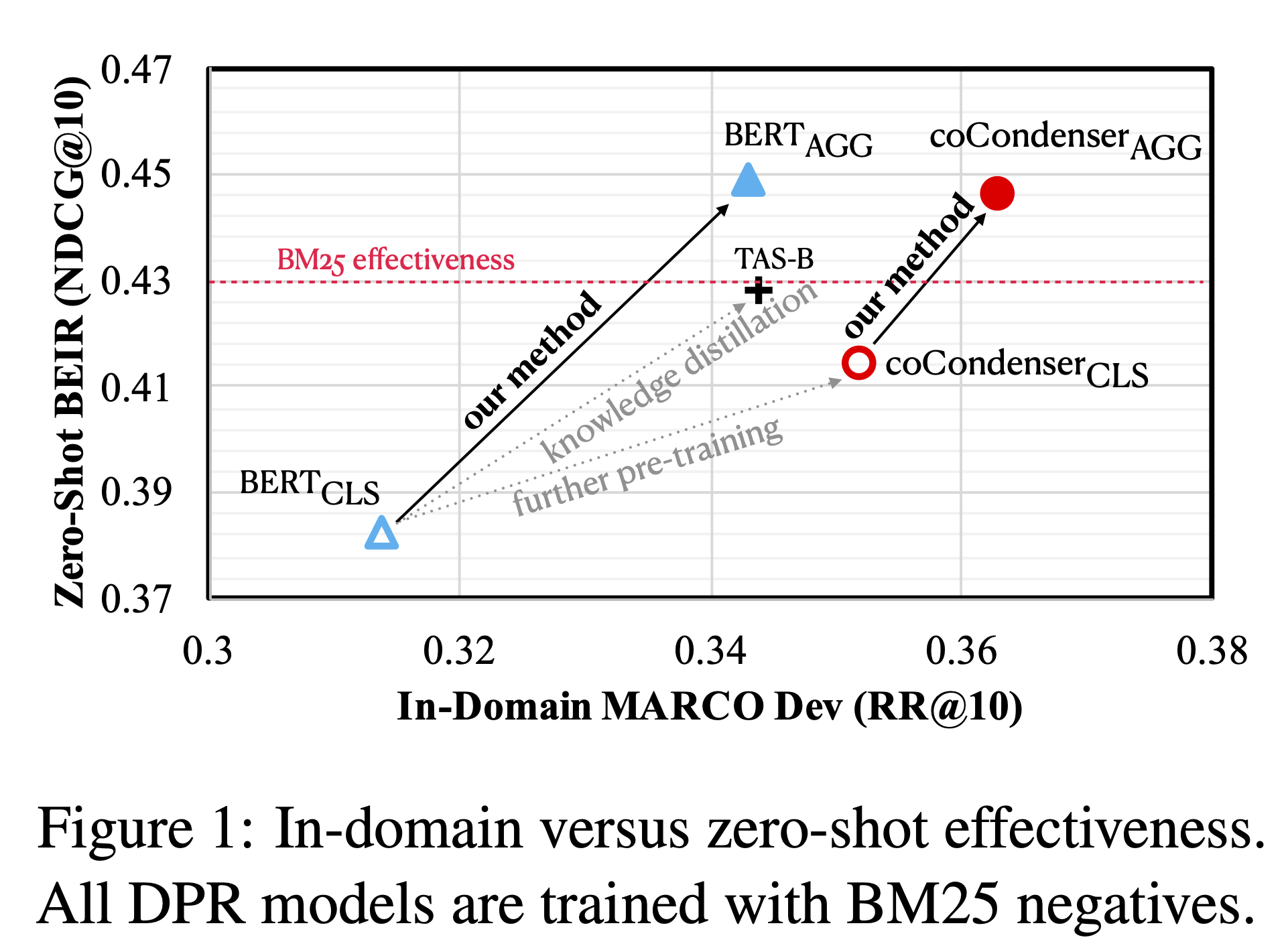

英文Aggretriever是一个编码器,将词汇和语义文本信息聚合到一个单一向量的密集向量中,用于密集检索,它是在MS MARCO语料库上使用BM25负采样进行微调的,遵循了 Aggretriever: A Simple Approach to Aggregate Textual Representation for Robust Dense Passage Retrieval 中所描述的方法。

有关微调的关联GitHub存储库可在此处找到 here ,使用pyserini进行复现的代码在[这里]。还提供了以下变体:

| Model | Initialization | MARCO Dev | Encoder Path |

|---|---|---|---|

| aggretriever-distilbert | distilbert-base-uncased | 34.1 | 1233321 |

| aggretriever-cocondenser | Luyu/co-condenser-marco | 36.2 | 1234321 |

使用方法(HuggingFace Transformers)

直接使用HuggingFace transformers中可用的模型。我们使用了来自pyserini的实现的Aggretriever here 。

from pyserini.encode._aggretriever import AggretrieverQueryEncoder

from pyserini.encode._aggretriever import AggretrieverDocumentEncoder

model_name = '/store/scratch/s269lin/experiments/aggretriever/hf_model/aggretriever-cocondenser'

query_encoder = AggretrieverQueryEncoder(model_name, device='cpu')

context_encoder = AggretrieverDocumentEncoder(model_name, device='cpu')

query = ["Where was Marie Curie born?"]

contexts = [

"Maria Sklodowska, later known as Marie Curie, was born on November 7, 1867.",

"Born in Paris on 15 May 1859, Pierre Curie was the son of Eugène Curie, a doctor of French Catholic origin from Alsace."

]

# Compute embeddings: take the last-layer hidden state of the [CLS] token

query_emb = query_encoder.encode(query)

ctx_emb = context_encoder.encode(contexts)

# Compute similarity scores using dot product

score1 = query_emb @ ctx_emb[0] # 45.56658

score2 = query_emb @ ctx_emb[1] # 45.81762