Model:

google/ul2

English

Introduction

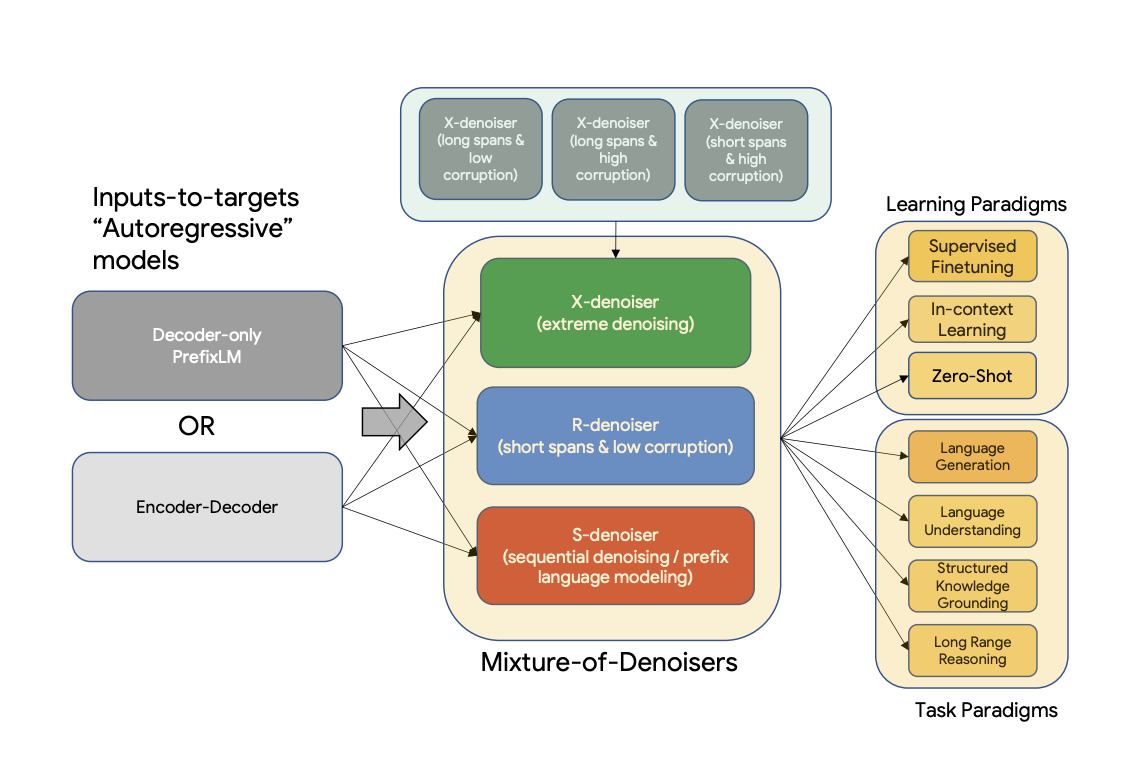

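UL2 is a unified framework for pretraining models that are universally effective across datasets and setups. UL2 uses Mixture-of-Denoisers (MoD), a pretraining objective that combines diverse pretraining paradigms. UL2 also introduces a notion of mode switching, wherein downstream fine-tuning is associated with specific pretraining schemes.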

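Abstract

Existing pre-trained models are generally geared towards a particular class of problems. To date, there seems to be still no consensus on what the right architecture and pre-training setup should be. This paper presents a unified framework for pre-training models that are universally effective across datasets and setups. We begin by disentangling architectural archetypes from pre-training objectives, two concepts that are commonly conflated. Next, we present a generalized and unified perspective for self-supervision in NLP and show how different pre-training objectives can be cast as one another and how interpolating between different objectives can be effective. We then propose Mixture-of-Denoisers (MoD), a pre-training objective that combines diverse pre-training paradigms together. We furthermore introduce a notion of mode switching, wherein downstream fine-tuning is associated with specific pre-training schemes. We conduct extensive ablative experiments to compare multiple pre-training objectives and find that our method pushes the Pareto frontier by outperforming T5 and/or GPT-like models across multiple diverse setups. Finally, by scaling our model up to 20B parameters, we achieve SOTA performance on 50 well-established supervised NLP tasks ranging from language generation (with automated and human evaluation), language understanding, text classification, question answering, commonsense reasoning, long text reasoning, structured knowledge grounding and information retrieval. Our model also achieves strong results at in-context learning, outperforming 175B GPT-3 on zero-shot SuperGLUE and tripling the performance of T5-XXL on one-shot summarization.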

For more information, please refer to the original paper.

Paper: Unifying Language Learning Paradigms

Authors: Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Xavier Garcia, Dara Bahri, Tal Schuster, Huaixiu Steven Zheng, Neil Houlsby, Donald Metzler

Training

The checkpoint was iteratively pre-trained on C4 and fine-tuned on a variety of datasets.

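Pretraining

The model is pretrained on the C4 corpus. For pretraining, the model is trained on a total of 1 trillion tokens on C4 (2 million steps) with a batch size of 1024. The sequence length is set to 512/512 for inputs and targets. Dropout is set to 0 during pretraining. Pretraining took slightly more than one month for about 1 trillion tokens. The model has 32 encoder layers and 32 decoder layers, a d_model of 4096 and a d_ff of 16384. The dimension of each head is 256, for a total of 16 heads. The model uses a model parallelism of 8. The same SentencePiece tokenizer as T5, with a vocabulary size of 32000, is used (see the T5 tokenizer documentation for more information).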
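The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below assumes that the token count is taken as one 512-token sequence per example (an assumption about how tokens are counted, not something the card states) and that the attention width equals heads times head dimension.

```python
# Figures quoted in the pretraining description.
steps = 2_000_000
batch_size = 1024
seq_len = 512          # assumption: count one 512-token sequence per example

total_tokens = steps * batch_size * seq_len
print(f"tokens seen: {total_tokens:,}")   # roughly 1 trillion

# Attention width implied by the head configuration.
n_heads = 16
d_head = 256
d_model = 4096
print("heads x head dim:", n_heads * d_head, "| d_model:", d_model)
```

Under these assumptions the product lands at about 1.05 trillion tokens, consistent with the "1 trillion tokens" figure, and the 16 heads of dimension 256 span the full model width of 4096.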

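UL-20B can be interpreted as a model that is quite similar to T5 but trained with a different objective and slightly different scaling knobs. UL-20B was trained using the Jax and T5X infrastructure.

The training objective during pretraining is a mixture of different denoising strategies, which are explained below: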

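Mixture of Denoisers

To quote the paper:

We conjecture that a strong universal model has to be exposed to solving a diverse set of problems during pre-training. Given that pre-training is done using self-supervision, we argue that such diversity should be injected into the objective of the model, otherwise the model might lack a certain ability, like long-coherent text generation. Motivated by this, we define three main paradigms that are used during pre-training: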

-

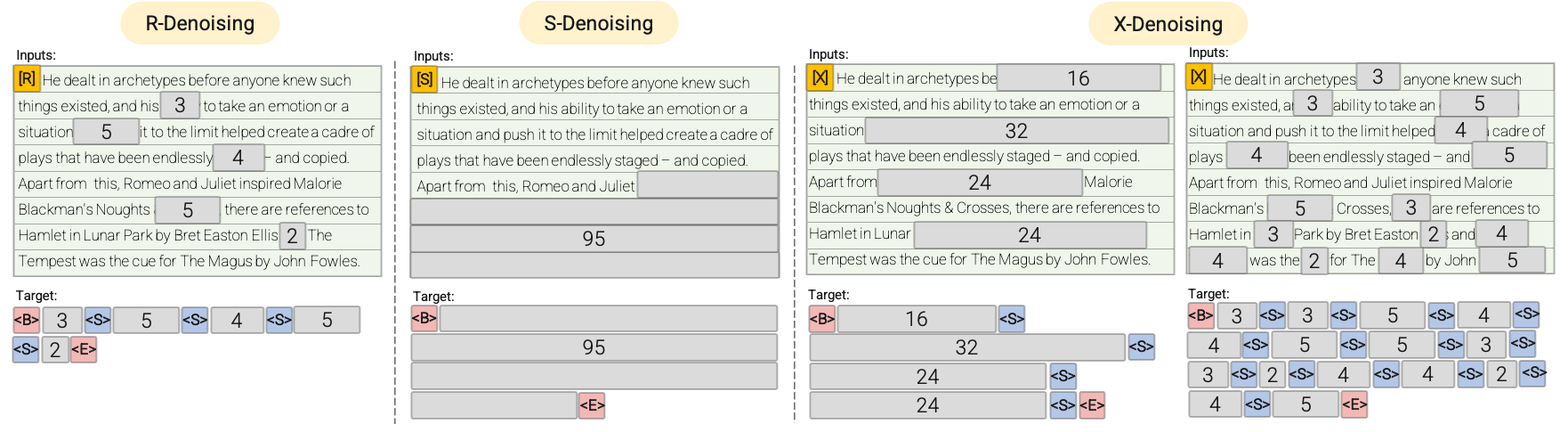

R-Denoiser:常规去噪是在 T5 中引入的标准跨度损坏,使用2到5个标记的跨度范围,将大约15%的输入标记屏蔽。这些跨度很短,可能有助于获取知识而不是学习生成流畅的文本。

-

S-Denoiser:这是一种特殊情况的去噪,我们观察到在定义输入到目标任务时存在严格的顺序,即前缀语言建模。为此,我们将输入序列分成两个子序列,作为上下文和目标,这样目标就不依赖于未来的信息。与标准的跨度损坏不同,其中可能存在比上下文标记更早位置的目标标记。注意,与前缀语言建模类似,上下文(前缀)保留了双向感受野。我们注意到,具有非常短的记忆或无记忆的S-Denoising在精神上类似于标准的因果语言建模。

-

X-Denoiser:这是去噪的一个极端版本,其中模型必须从有限信息中恢复出大部分输入。这模拟了模型需要从相对有限的信息中生成长目标的情况。为此,我们选择包括一个区域损坏的示例,其中大约有50%的输入序列被屏蔽。这是通过增加跨度长度和/或损坏率来实现的。如果预训练任务具有长跨度(例如≥12个标记)或具有较大的损坏率(例如≥30%),则我们认为X-denoising是极端的。 X-denoising的动机是它在常规跨度损坏和语言模型之间进行插值。

请参考以下图表以获得更直观的解释:

Important: For more details, please see section 3.1.2 of the paper.
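To make the three denoisers more concrete, here is a minimal sketch of how inputs and targets could be constructed for each. It is not the T5X implementation: the sampler places one fixed-length span per equal-sized chunk (a simplification), and the function names `corrupt_spans`, `sample_spans`, and `prefix_lm_split` are hypothetical. Only the `<extra_id_N>` sentinel format and the span-length and corruption-rate settings are taken from the text.

```python
import random

def sentinel(i):
    # T5-style sentinel token, as used in the examples in this card.
    return f"<extra_id_{i}>"

def corrupt_spans(tokens, spans):
    """Replace each (start, length) span with a sentinel.

    Returns (inputs, targets). Spans are assumed not to overlap.
    """
    inputs, targets, cursor = [], [], 0
    for i, (start, length) in enumerate(sorted(spans)):
        inputs += tokens[cursor:start] + [sentinel(i)]
        targets += [sentinel(i)] + tokens[start:start + length]
        cursor = start + length
    inputs += tokens[cursor:]
    return inputs, targets

def sample_spans(n_tokens, corruption_rate, span_len, rng):
    """Simplified sampler: one fixed-length span per equal-sized chunk."""
    n_spans = max(1, round(n_tokens * corruption_rate / span_len))
    chunk = n_tokens // n_spans
    if chunk <= span_len:
        raise ValueError("sequence too short for this rate and span length")
    return [(k * chunk + rng.randrange(chunk - span_len), span_len)
            for k in range(n_spans)]

def prefix_lm_split(tokens, split):
    """S-denoising: the prefix is the context, the remainder is the target."""
    return tokens[:split] + [sentinel(0)], [sentinel(0)] + tokens[split:]

tokens = [f"t{i}" for i in range(48)]
rng = random.Random(0)

# R-denoiser: short spans, low corruption rate.
r_in, r_tgt = corrupt_spans(tokens, sample_spans(len(tokens), 0.15, 3, rng))
# X-denoiser: long spans, high corruption rate.
x_in, x_tgt = corrupt_spans(tokens, sample_spans(len(tokens), 0.50, 12, rng))
# S-denoiser: prefix language modeling.
s_in, s_tgt = prefix_lm_split(tokens, 36)

for name, tgt in [("R", r_tgt), ("X", x_tgt), ("S", s_tgt)]:
    masked = sum(not t.startswith("<extra_id_") for t in tgt)
    print(name, "masked fraction:", masked / len(tokens))
```

The same `corrupt_spans` routine serves both R and X; only the span length and corruption rate differ, which is one way to see X-denoising as sitting between regular span corruption and language modeling.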

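Fine-tuning

The model was continuously fine-tuned after N pretraining steps, where N is typically between 50k and 100k. In other words, every N pretraining steps, the model is fine-tuned on each downstream task. See section 5.2.2 of the paper for an overview of all datasets used for fine-tuning.

Because the model is continuously fine-tuned, fine-tuning on a task is stopped once that task has reached state-of-the-art performance, in order to save compute. In total, the model was trained for 2.65 million steps.

Important: For more details, please see sections 5.2.1 and 5.2.2 of the paper.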
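The schedule described above can be pictured as a simple plan of which tasks are fine-tuned at which pretraining checkpoint. The sketch below is purely illustrative: the function `finetune_schedule`, the task names, the step counts, and the `sota_step` mapping are all hypothetical placeholders, and the actual procedure is the one described in the paper.

```python
def finetune_schedule(total_steps, interval, tasks, sota_step):
    """Return {checkpoint step: [tasks fine-tuned at that step]}.

    sota_step maps a task to the (hypothetical) checkpoint step at which it
    reached the target score; the task is skipped at all later checkpoints.
    """
    plan = {}
    for step in range(interval, total_steps + 1, interval):
        plan[step] = [t for t in tasks if step <= sota_step.get(t, total_steps)]
    return plan

plan = finetune_schedule(
    total_steps=300_000,            # illustrative only
    interval=100_000,               # N, within the 50k to 100k range quoted above
    tasks=["task_a", "task_b"],     # placeholder task names
    sota_step={"task_a": 100_000},  # task_a is dropped after the first checkpoint
)
for step, active in plan.items():
    print(step, active)
```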

Contribution

This model was contributed by Daniel Hesslow.

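Examples

The following examples show how to predict masked passages using the different denoising strategies. Given the size of the model, they need to be run on at least a 40GB A100 GPU.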
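Each example below selects a denoising mode by starting the input with a mode prefix: [S2S], [NLU], or [NLG]. A small helper like the following can keep that mapping in one place. The names `MODE_PREFIX` and `with_mode` are hypothetical and not part of the `transformers` API; only the three prefix strings come from this card.

```python
# Mode prefixes as used in the examples in this card.
MODE_PREFIX = {
    "S": "[S2S]",  # S-Denoising
    "R": "[NLU]",  # R-Denoising
    "X": "[NLG]",  # X-Denoising
}

def with_mode(text, denoiser):
    """Prepend the mode prefix for the given denoiser unless already present."""
    prefix = MODE_PREFIX[denoiser]
    return text if text.startswith(prefix) else f"{prefix} {text}"

print(with_mode("Mr. Dursley was the director of a firm called <extra_id_0>", "R"))
```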

S-Denoising

For S-Denoising, make sure the input text starts with the prefix [S2S], as shown below.

from transformers import T5ForConditionalGeneration, AutoTokenizer

import torch

model = T5ForConditionalGeneration.from_pretrained("google/ul2", low_cpu_mem_usage=True, torch_dtype=torch.bfloat16).to("cuda")

tokenizer = AutoTokenizer.from_pretrained("google/ul2")

input_string = "[S2S] Mr. Dursley was the director of a firm called Grunnings, which made drills. He was a big, solid man with a bald head. Mrs. Dursley was thin and blonde and more than the usual amount of neck, which came in very useful as she spent so much of her time craning over garden fences, spying on the neighbours. The Dursleys had a small son called Dudley and in their opinion there was no finer boy anywhere <extra_id_0>"

inputs = tokenizer(input_string, return_tensors="pt").input_ids.to("cuda")

outputs = model.generate(inputs, max_length=200)

print(tokenizer.decode(outputs[0]))

# -> <pad>. Dudley was a very good boy, but he was also very stupid.</s>

R-Denoising

For R-Denoising, make sure the input text starts with the prefix [NLU], as shown below.

from transformers import T5ForConditionalGeneration, AutoTokenizer

import torch

model = T5ForConditionalGeneration.from_pretrained("google/ul2", low_cpu_mem_usage=True, torch_dtype=torch.bfloat16).to("cuda")

tokenizer = AutoTokenizer.from_pretrained("google/ul2")

input_string = "[NLU] Mr. Dursley was the director of a firm called <extra_id_0>, which made <extra_id_1>. He was a big, solid man with a bald head. Mrs. Dursley was thin and <extra_id_2> of neck, which came in very useful as she spent so much of her time <extra_id_3>. The Dursleys had a small son called Dudley and <extra_id_4>"

inputs = tokenizer(input_string, return_tensors="pt", add_special_tokens=False).input_ids.to("cuda")

outputs = model.generate(inputs, max_length=200)

print(tokenizer.decode(outputs[0]))

# -> "<pad><extra_id_0> Burrows<extra_id_1> brooms for witches and wizards<extra_id_2> had a lot<extra_id_3> scolding Dudley<extra_id_4> a daughter called Petunia. Dudley was a nasty, spoiled little boy who was always getting into trouble. He was very fond of his pet rat, Scabbers.<extra_id_5> Burrows<extra_id_3> screaming at him<extra_id_4> a daughter called Petunia</s>"

X-Denoising

For X-Denoising, make sure the input text starts with the prefix [NLG], as shown below.

from transformers import T5ForConditionalGeneration, AutoTokenizer

import torch

model = T5ForConditionalGeneration.from_pretrained("google/ul2", low_cpu_mem_usage=True, torch_dtype=torch.bfloat16).to("cuda")

tokenizer = AutoTokenizer.from_pretrained("google/ul2")

input_string = "[NLG] Mr. Dursley was the director of a firm called Grunnings, which made drills. He was a big, solid man with a bald head. Mrs. Dursley was thin and blonde and more than the usual amount of neck, which came in very useful as she spent so much of her time craning over garden fences, spying on the neighbours. The Dursleys had a small son called Dudley and in their opinion there was no finer boy anywhere. <extra_id_0>"

model.cuda()

inputs = tokenizer(input_string, return_tensors="pt", add_special_tokens=False).input_ids.to("cuda")

outputs = model.generate(inputs, max_length=200)

print(tokenizer.decode(outputs[0]))

# -> "<pad><extra_id_0> Burrows<extra_id_1> a lot of money from the manufacture of a product called '' Burrows'''s ''<extra_id_2> had a lot<extra_id_3> looking down people's throats<extra_id_4> a daughter called Petunia. Dudley was a very stupid boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat,"
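The decoded outputs of the R- and X-Denoising examples interleave sentinel tokens with the predicted text, and sentinels can repeat. One possible way to post-process such a string is sketched below using only the standard library. The helpers `parse_sentinels` and `fill_in` are hypothetical names, and keeping only the first prediction per sentinel is an assumption made here for simplicity.

```python
import re

SENTINEL = re.compile(r"<extra_id_(\d+)>")

def parse_sentinels(decoded):
    """Map sentinel index -> first text predicted for that sentinel."""
    text = decoded.replace("<pad>", "").replace("</s>", "")
    # With one capture group, split() yields [before, idx, text, idx, text, ...].
    parts = SENTINEL.split(text)
    spans = {}
    for idx, chunk in zip(parts[1::2], parts[2::2]):
        spans.setdefault(int(idx), chunk.strip())  # keep the first occurrence
    return spans

def fill_in(template, spans):
    """Substitute predicted spans back into the masked input."""
    return SENTINEL.sub(lambda m: spans.get(int(m.group(1)), m.group(0)), template)

decoded = "<pad><extra_id_0> Burrows<extra_id_1> brooms for witches and wizards<extra_id_2> had a lot</s>"
spans = parse_sentinels(decoded)
print(spans)
print(fill_in("a firm called <extra_id_0>, which made <extra_id_1>.", spans))
```

Sentinels without a prediction are left in place by `fill_in`, which makes missing spans easy to spot.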