Model:

microsoft/conditional-detr-resnet-50

English

Conditional DETR model with ResNet-50 backbone

Conditional DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper "Conditional DETR for Fast Training Convergence" by Meng et al. and first released in this repository.

Model description

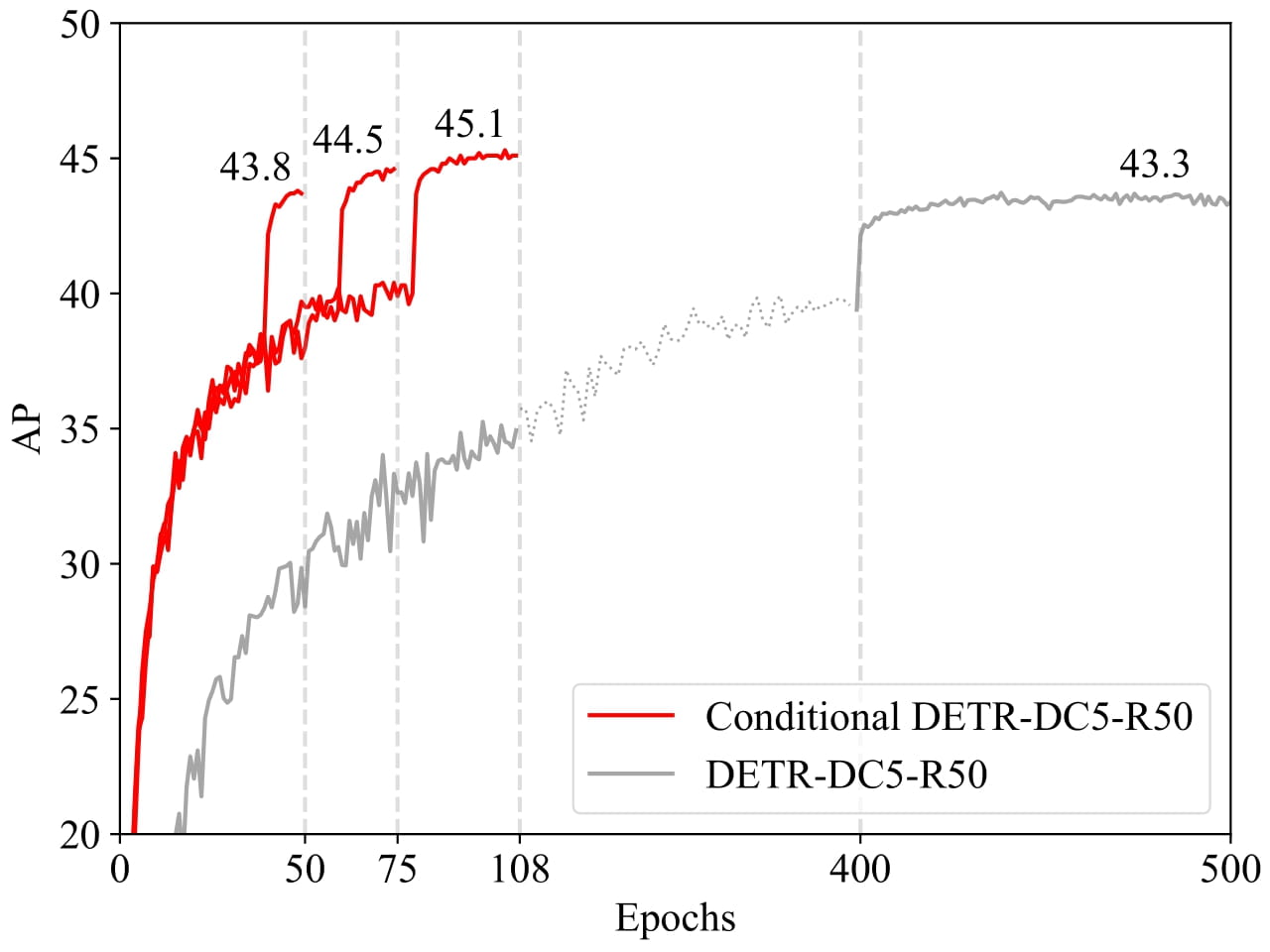

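The recently developed DETR approach applies the Transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we address the critical issue of slow training convergence and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by the observation that the cross-attention in DETR relies heavily on the content embeddings for localizing the four extremities and predicting the box, which increases the need for high-quality content embeddings and thus the training difficulty. Our approach, named conditional DETR, learns a conditional spatial query from the decoder embedding for decoder multi-head cross-attention. The benefit is that, through the conditional spatial query, each cross-attention head is able to attend to a band containing a distinct region, e.g., one object extremity or a region inside the object box. This narrows down the spatial range for localizing the distinct regions for object classification and box regression, thus relaxing the dependence on the content embeddings and easing the training. Empirical results show that conditional DETR converges 6.7 times faster for the backbones R50 and R101 and 10 times faster for the stronger backbones DC5-R50 and DC5-R101.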
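The decoupling described above can be illustrated with a toy sketch. It assumes, following one reading of the cited paper, that the content and spatial parts of the query and key are concatenated, so that the attention logit splits into a content term plus a spatial term, and that the conditional spatial query is an element-wise product of a learned scale vector with a reference-point embedding. All vectors and function names below are hypothetical and chosen only for illustration; this is not the model's actual implementation.

```python
import math


def dot(u, v):
    """Plain dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def conditional_spatial_query(scale, reference_embedding):
    """Element-wise product of a scale vector (assumed to be predicted from the
    decoder embedding) with the positional embedding of a reference point."""
    return [s * p for s, p in zip(scale, reference_embedding)]


# Toy, hand-picked vectors (hypothetical values).
content_query = [0.2, -0.1, 0.4]
content_key = [0.5, 0.3, -0.2]
scale = [1.0, 0.5, 2.0]
reference_embedding = [0.1, 0.8, -0.3]
positional_key = [0.7, -0.4, 0.6]

spatial_query = conditional_spatial_query(scale, reference_embedding)

# Concatenate content and spatial parts instead of adding them.
query = content_query + spatial_query
key = content_key + positional_key

logit = dot(query, key)
content_term = dot(content_query, content_key)
spatial_term = dot(spatial_query, positional_key)

# The logit separates into a content-only term and a spatial-only term.
print(math.isclose(logit, content_term + spatial_term))
```

Because the two terms do not mix, the spatial term can localize a region without relying on the content embedding, which is the dependence the description says the method relaxes.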

Intended uses & limitations

You can use the raw model for object detection. See the model hub to look for all available Conditional DETR models.

How to use

Here is how to use this model:

    from transformers import AutoImageProcessor, ConditionalDetrForObjectDetection
    import torch
    from PIL import Image
    import requests

    url = "http://images.cocodataset.org/val2017/000000039769.jpg"
    image = Image.open(requests.get(url, stream=True).raw)

    processor = AutoImageProcessor.from_pretrained("microsoft/conditional-detr-resnet-50")
    model = ConditionalDetrForObjectDetection.from_pretrained("microsoft/conditional-detr-resnet-50")

    inputs = processor(images=image, return_tensors="pt")
    outputs = model(**inputs)

    # convert outputs (bounding boxes and class logits) to COCO API
    # let's only keep detections with score > 0.7
    target_sizes = torch.tensor([image.size[::-1]])
    results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

    for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
        box = [round(i, 2) for i in box.tolist()]
        print(
            f"Detected {model.config.id2label[label.item()]} with confidence "
            f"{round(score.item(), 3)} at location {box}"
        )

This should output:

    Detected remote with confidence 0.833 at location [38.31, 72.1, 177.63, 118.45]
    Detected cat with confidence 0.831 at location [9.2, 51.38, 321.13, 469.0]
    Detected cat with confidence 0.804 at location [340.3, 16.85, 642.93, 370.95]

Currently, both the feature extractor and model support PyTorch.
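To make the post-processing step in the usage example more concrete, here is a minimal pure-Python sketch of what such a step typically does: it rescales a box to pixel coordinates using the image height and width, and keeps only detections above a score threshold. It assumes the raw box is a normalized (center_x, center_y, width, height) tuple, as is usual for DETR-style models. The helper names `center_to_corners` and `keep_confident` are hypothetical and are not part of the transformers API.

```python
def center_to_corners(box, image_height, image_width):
    """Convert a normalized (cx, cy, w, h) box into absolute
    (x_min, y_min, x_max, y_max) pixel coordinates."""
    cx, cy, w, h = box
    x_min = (cx - w / 2) * image_width
    y_min = (cy - h / 2) * image_height
    x_max = (cx + w / 2) * image_width
    y_max = (cy + h / 2) * image_height
    return [x_min, y_min, x_max, y_max]


def keep_confident(scores, items, threshold=0.7):
    """Keep only the (score, item) pairs whose score exceeds the threshold."""
    return [(s, item) for s, item in zip(scores, items) if s > threshold]


# Hypothetical normalized prediction for an image of height 480 and width 640.
pixel_box = center_to_corners((0.5, 0.5, 0.25, 0.5), image_height=480, image_width=640)
print(pixel_box)  # [240.0, 120.0, 400.0, 360.0]

# Hypothetical scores: only the first detection passes the 0.7 threshold.
print(keep_confident([0.9, 0.5], ["cat", "remote"]))  # [(0.9, 'cat')]
```

Note that the size is passed as (height, width), which matches the `image.size[::-1]` reversal in the usage example, since PIL reports sizes as (width, height).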

Training data

The conditional DETR model was trained on COCO 2017 object detection, a dataset consisting of 118k/5k annotated images for training/validation respectively.

BibTeX entry and citation info

    @inproceedings{MengCFZLYS021,
      author    = {Depu Meng and
                   Xiaokang Chen and
                   Zejia Fan and
                   Gang Zeng and
                   Houqiang Li and
                   Yuhui Yuan and
                   Lei Sun and
                   Jingdong Wang},
      title     = {Conditional {DETR} for Fast Training Convergence},
      booktitle = {2021 {IEEE/CVF} International Conference on Computer Vision, {ICCV}
                   2021, Montreal, QC, Canada, October 10-17, 2021},
    }